ConferenceService

The ConferenceService allows an application to manage a conference life cycle and interact with the conference. Using the service, you can create, join, and leave conferences.

For more information about creating and joining conferences, see the Basic Application Concepts guides.

Events

autoplayBlocked

• autoplayBlocked(): void

Emitted when conference participants' audio streams are blocked by a browser's auto-play policy that requires permission to access the participant's speaker.

When this event occurs, the application requests permission to play the incoming audio stream. After a user interaction (click or touch), the application calls the playBlockedAudio method to play the audio stream.

example

VoxeetSDK.conference.on("autoplayBlocked", () => {
  const button = document.getElementById("unmute_audio");
  button.onclick = () => {
    VoxeetSDK.conference.playBlockedAudio();
  };
});

Returns: void

ended

• ended(): void

Emitted when the replayed conference has ended.

example

VoxeetSDK.conference.on("ended", () => {});

Returns: void

error

• error(error: Error): void

Emitted when a WebSocketError, PeerConnectionFailedError, or PeerDisconnectedError occurs.

PeerConnectionFailedError and PeerDisconnectedError are PeerErrors whose PeerConnectionState value is failed and disconnected, respectively.

example

VoxeetSDK.conference.on("error", (error) => {});

Parameters:

| Name | Type | Description |
|---|---|---|
| error | Error | The received error. |

Returns: void

joined

• joined(): void

Emitted when the application has successfully joined the conference.

example

VoxeetSDK.conference.on("joined", () => {});

Returns: void

left

• left(): void

Emitted when the application has left the conference.

example

VoxeetSDK.conference.on("left", () => {});

Returns: void

participantAdded

• participantAdded(participant: Participant): void

Emitted when a new participant is invited to a conference. The SDK does not emit the participantAdded event for the local participant. Listeners only receive the participantAdded events about users; they do not receive events for other listeners. Users receive the participantAdded events about users and do not receive any events about listeners. To notify all application users about the number of participants who are present at a conference, use the activeParticipants event.

example

VoxeetSDK.conference.on("participantAdded", (participant) => {});

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The invited participant who is added to a conference. |

Returns: void

participantUpdated

• participantUpdated(participant: Participant): void

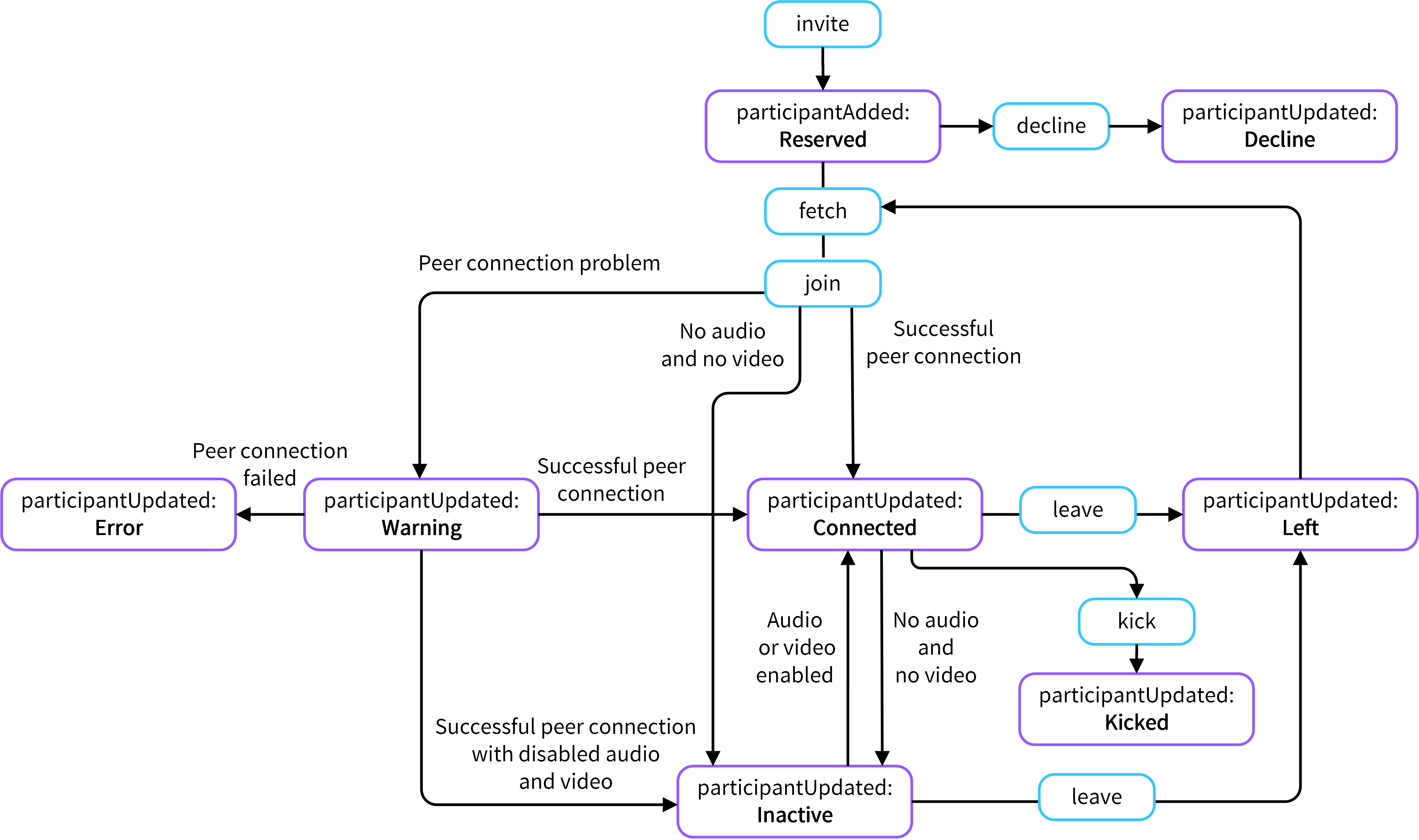

Emitted when a participant changes ParticipantStatus. Listeners only receive the participantUpdated events about users; they do not receive events for other listeners. Users receive the participantUpdated events about users and do not receive any events about listeners. To notify all application users about the number of participants who are present at a conference, use the activeParticipants event.

The following graphic shows possible status changes during a conference:

Diagram that presents the possible status changes.

example

VoxeetSDK.conference.on("participantUpdated", (participant) => {});

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The conference participant who changed status. |

Returns: void

permissionsUpdated

• permissionsUpdated(permissions: Set<ConferencePermission>): void

Emitted when the local participant's permissions are updated.

Parameters:

| Name | Type | Description |
|---|---|---|
| permissions | Set<ConferencePermission> | The updated conference permissions. |

Returns: void

qualityIndicators

• qualityIndicators(indicators: Map<string, QualityIndicator>): void

The Mean Opinion Score (MOS) represents the participants' audio and video quality. The SDK calculates the audio and video quality scores and reports the values in a range from 1 to 5, where 1 represents the worst quality and 5 represents the best quality. When a MOS is not available, the SDK returns the value -1.

Note: Mean Opinion Scores (MOS) are available only for participants who use the DVC codec on web clients.
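An application that displays these scores has to handle the -1 "not available" value separately from the 1 to 5 range. The following is a minimal sketch under assumptions: `describeMos` and its labels are hypothetical, the quality thresholds are arbitrary, and the helper takes a plain numeric score instead of assuming the fields of the QualityIndicator model.

```javascript
// Hypothetical helper: turns a MOS value into a UI label.
// -1 means the SDK could not compute a score; valid scores range from 1 (worst) to 5 (best).
function describeMos(score) {
  if (score === -1) {
    return "unavailable";
  }
  if (typeof score !== "number" || score < 1 || score > 5) {
    throw new RangeError(`Unexpected MOS value: ${score}`);
  }
  // Arbitrary UI thresholds chosen for illustration only.
  if (score >= 4) return "good";
  if (score >= 3) return "fair";
  return "poor";
}

console.log(describeMos(4.2)); // "good"
console.log(describeMos(-1)); // "unavailable"
```

Throwing on out-of-range values surfaces unexpected input early instead of silently showing a misleading label.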

Parameters:

| Name | Type | Description |
|---|---|---|
| indicators | Map<string, QualityIndicator> | A map that includes all conference participants' quality indicators. |

Returns: void

streamAdded

• streamAdded(participant: Participant, stream: MediaStreamWithType): void

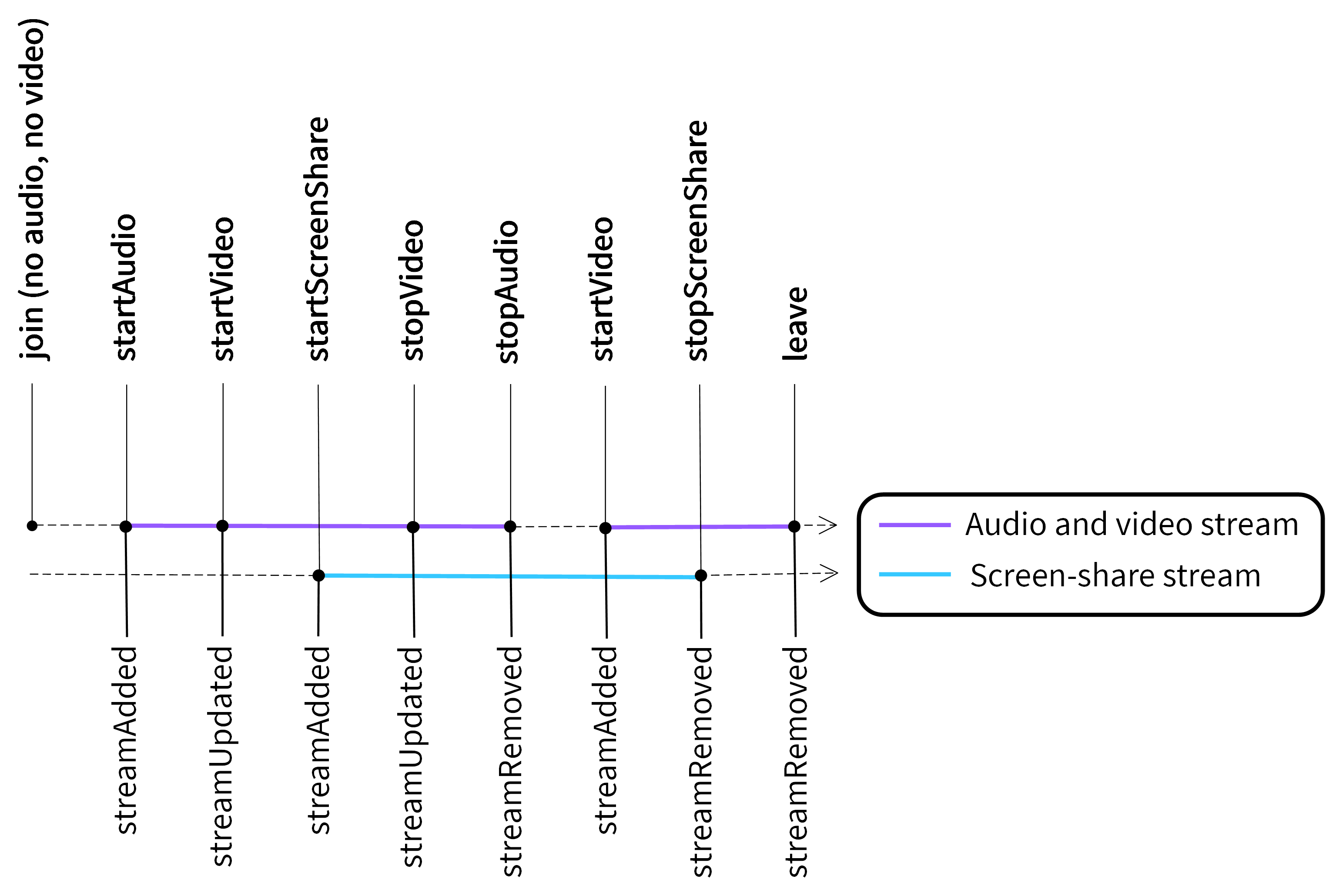

Emitted when the SDK adds a new stream to a conference participant. Each conference participant can be connected to two streams: the audio and video stream and the screen-share stream. If a participant enables audio or video, the SDK adds the audio and video stream to the participant and emits the streamAdded event to all participants. When a participant is connected to the audio and video stream and changes the stream, for example, enables a camera while using a microphone, the SDK updates the audio and video stream and emits the streamUpdated event. When a participant starts sharing a screen, the SDK adds the screen-share stream to this participants and emits the streamAdded event to all participants. The following graphic shows this behavior:

The difference between the streamAdded and streamUpdated events

Based on the stream type, the application chooses to either render a camera view or a screen-share view.
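The choice between a camera view and a screen-share view can be isolated in a small helper. This is a minimal sketch that assumes the `type` property of MediaStreamWithType holds either "Camera" or "ScreenShare"; check the MediaStreamWithType model for the exact values. The `chooseView` helper and the returned view IDs are hypothetical.

```javascript
// Hypothetical helper: decides where to render a stream received in streamAdded or streamUpdated.
// Assumes stream.type is "Camera" or "ScreenShare" (verify against the MediaStreamWithType model).
function chooseView(stream) {
  switch (stream.type) {
    case "ScreenShare":
      return "screen-share-view";
    case "Camera":
      return "camera-view";
    default:
      // Unknown types are ignored rather than rendered in the wrong container.
      return null;
  }
}

// Possible usage in a browser, where the IDs refer to hypothetical <video> elements:
// VoxeetSDK.conference.on("streamAdded", (participant, stream) => {
//   const view = chooseView(stream);
//   if (view) document.getElementById(view).srcObject = stream;
// });
```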

When a new participant joins a conference with enabled audio and video, the SDK emits the streamAdded event that includes audio and video tracks.

The SDK can also emit the streamAdded event to the local participant only. When the local participant uses the stopAudio method to locally mute a selected remote participant who does not use a camera, the local participant receives the streamRemoved event. After the local participant uses the startAudio method for this remote participant, the local participant receives the streamAdded event.

Note: In Dolby Voice conferences, each conference participant receives only one mixed audio stream from the server. To keep backward compatibility with the customers' implementation, the SDK offers a faked audio track for audio transmission. The faked audio track is included in the streamAdded and streamRemoved events. The SDK takes the audio stream information from the participantAdded and participantUpdated events.

example

VoxeetSDK.conference.on("streamAdded", (participant, stream) => {
  const node = document.getElementById("received_video");
  navigator.attachMediaStream(node, stream);
});

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The participant whose stream was added to a conference. |
| stream | MediaStreamWithType | The added media stream. |

Returns: void

streamRemoved

• streamRemoved(participant: Participant, stream: MediaStreamWithType): void

Emitted when the SDK removes a stream from a conference participant. Each conference participant can be connected to two streams: the audio and video stream and the screen-share stream. If a participant disables audio and video or stops a screen-share presentation, the SDK removes the proper stream and emits the streamRemoved event to all conference participants.

The SDK can also emit the streamRemoved event to the local participant only. When the local participant uses the stopAudio method to locally mute a selected remote participant who does not use a camera, the local participant receives the streamRemoved event.

Note: In Dolby Voice conferences, each conference participant receives only one mixed audio stream from the server. To keep backward compatibility with the customers' implementation, the SDK offers a faked audio track for audio transmission. The faked audio track is included in the streamAdded and streamRemoved events. The SDK takes the audio stream information from the participantAdded and participantUpdated events.

example

VoxeetSDK.conference.on("streamRemoved", (participant, stream) => {});

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The participant whose stream was removed from a conference. |
| stream | MediaStreamWithType | The removed media stream. |

Returns: void

streamUpdated

• streamUpdated(participant: Participant, stream: MediaStreamWithType): void

Emitted whenever a participant's media stream is modified. The SDK emits the event to all conference participants in the following situations:

- A conference participant who is connected to an audio and video stream changes the stream by enabling a microphone while using a camera or by enabling a camera while using a microphone.

- A conference participant starts sharing their screen with audio enabled. In this situation, the SDK emits both the streamAdded and streamUpdated events.

The SDK can also emit the streamUpdated event to the local participant only. When the local participant uses the stopAudio or startAudio method to locally mute or unmute a selected remote participant who uses a camera, the local participant receives the streamUpdated event.

example

VoxeetSDK.conference.on("streamUpdated", (participant, stream) => {
  const node = document.getElementById("received_video");
  navigator.attachMediaStream(node, stream);
});

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The participant whose stream was updated during a conference. |
| stream | MediaStreamWithType | The updated media stream. |

Returns: void

switched

• switched(): void

Emitted when a new participant joins a conference using the same external ID as another participant who has joined this conference earlier. This event may occur when a participant joins the same conference using another browser or device. In such a situation, the SDK removes the participant who has joined the conference earlier.

Returns: void

Accessors

current

• get current(): Conference | null

Returns information about the current conference. Use this accessor to get information that is available in the Conference object, such as the conference alias, ID, whether the conference is new, conference parameters, the local participant's conference permissions, conference PIN code, or conference status. For example, use the following code to check the local participant's conference permissions:

VoxeetSDK.conference.current.permissions

Returns: Conference | null

maxVideoForwarding

• get maxVideoForwarding(): number

Returns the maximum number of video streams that can be transmitted to the local participant.

Returns: number

participants

• get participants(): Map<string, Participant>

Returns a map of the users who are present at a conference. The local participant is also included in the map, even if the local participant is a listener.

Returns: Map<string, Participant>

Methods

audioLevel

▸ audioLevel(participant: Participant, callback: Function): void

Gets a participant's audio level. The method allows getting the audio level of either only the local participant or all participants, depending on the conference type and the codec used:

| Conference type | Codec | Participants whose audio level you can get |
|---|---|---|
| Dolby Voice | DVC | All participants |
| Dolby Voice | Opus | Local participant |
| Non-Dolby Voice | Opus | All participants |

The possible values of the audio level are in a range from 0.0 to 1.0. In SDK 3.10 and later, the audio level is reported even when the local participant is muted. This lets you warn the local participant that they are speaking while muted and that their audio is not sent to the conference.
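The availability table and the muted-speaker warning can each be expressed as a small check. This is a minimal sketch under assumptions: the helper names, the string values for conference type and codec, and the 0.2 warning threshold (borrowed from the isSpeaking description) are illustrative choices, not part of the SDK.

```javascript
// Hypothetical helper encoding the audioLevel availability table.
// conferenceType: "DolbyVoice" | "NonDolbyVoice"; codec: "DVC" | "Opus" (illustrative strings).
function canReadAudioLevel(conferenceType, codec, isLocalParticipant) {
  if (conferenceType === "DolbyVoice" && codec === "DVC") return true;
  if (conferenceType === "DolbyVoice" && codec === "Opus") return isLocalParticipant;
  if (conferenceType === "NonDolbyVoice" && codec === "Opus") return true;
  return false; // combinations not listed in the table
}

// Hypothetical check for the "speaking while muted" warning (SDK 3.10 and later).
// The 0.2 threshold mirrors the one isSpeaking uses; adjust it to your needs.
function shouldWarnMutedSpeaker(isMuted, audioLevel, threshold = 0.2) {
  return isMuted && audioLevel > threshold;
}

// Possible wiring in a browser; showMutedWarning is a hypothetical UI function:
// VoxeetSDK.conference.audioLevel(VoxeetSDK.session.participant, (level) => {
//   if (shouldWarnMutedSpeaker(VoxeetSDK.conference.isMuted(), level)) showMutedWarning();
// });
```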

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The conference participant. |
| callback | Function | The callback that receives the audio level. |

Returns: void

create

▸ create(options: ConferenceOptions): Promise<Conference>

Creates a conference with ConferenceOptions.

Parameters:

| Name | Type | Description |
|---|---|---|
| options | ConferenceOptions | The conference options. |

Returns: Promise<Conference>

demo

▸ demo(options?: DemoOptions): Promise<Conference>

Creates and joins a demo conference.

Example:

const conference = await VoxeetSDK.conference.demo();

Parameters:

| Name | Type | Description |
|---|---|---|
| options? | DemoOptions | Additional options for participants joining a demo conference. |

Returns: Promise<Conference>

fetch

▸ fetch(conferenceId: string): Promise<Conference>

Provides a Conference object that allows joining a conference. The returned object is based on the cached data received from the SDK and includes only the conference ID, list of the conference participants, and the conference permissions.

For more information about using the fetch method, see the Conferencing document.

Parameters:

| Name | Type | Description |
|---|---|---|
| conferenceId | string | The conference ID. |

Returns: Promise<Conference>

isMuted

▸ isMuted(): Boolean

Gets the current mute state of the local participant.

Returns: Boolean

isSpeaking

▸ isSpeaking(participant: Participant, callback: Function): any

Gets the participant's current speaking status for an active talker indicator. The callback receives true if the participant's audio level is greater than 0.2. To keep the status up to date, this method must be called repeatedly during a conference.
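Because the status has to be polled, an application typically calls isSpeaking on a timer and updates the UI only when the status changes. This is a minimal sketch under assumptions: the 500 ms interval and the `createSpeakingTracker` and `pollSpeaking` helpers are hypothetical, and the `check` argument stands in for isSpeaking so that the logic can run outside a browser.

```javascript
// Hypothetical helper: wraps an onChange handler so it fires only when the status flips.
function createSpeakingTracker(onChange) {
  let lastStatus = null;
  return (isSpeaking) => {
    if (isSpeaking !== lastStatus) {
      lastStatus = isSpeaking;
      onChange(isSpeaking); // e.g. toggle an active-speaker highlight
    }
  };
}

// Hypothetical polling loop; `check(participant, callback)` has the same shape as isSpeaking.
function pollSpeaking(check, participant, onChange, intervalMs = 500) {
  const tracker = createSpeakingTracker(onChange);
  const timer = setInterval(() => check(participant, tracker), intervalMs);
  return () => clearInterval(timer); // call to stop polling, e.g. when the participant leaves
}

// Possible usage in a browser; highlightSpeaker is a hypothetical UI function:
// const stop = pollSpeaking(
//   (p, cb) => VoxeetSDK.conference.isSpeaking(p, cb),
//   participant,
//   (speaking) => highlightSpeaker(participant, speaking)
// );
```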

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The conference participant. |
| callback | Function | The callback that accepts a boolean value indicating the participant's current speaking status. If the boolean value is true, the callback can mark the participant as an active speaker in the application UI. |

Returns: any

join

▸ join(conference: Conference, options: JoinOptions): Promise<Conference>

Joins the conference.

Note: Participants who use macOS and the Safari browser to join conferences may experience distorted audio. To solve the problem, we recommend using the latest version of Safari.

Note: Due to a known Firefox issue, a user who has never permitted Firefox to use a microphone and camera cannot join a conference as a listener. If you want to join a conference as a listener using the Firefox browser, make sure that Firefox has permission to use your camera and microphone. To check the permissions, follow these steps:

1. Select the lock icon in the address bar.

2. Select the right arrow placed next to Connection Secure.

3. Select More information.

4. Go to the Permissions tab.

5. Look for the Use the camera and Use the microphone permissions and select the Allow option for each.

Note: If you use SDK 3.7 or later and use two different URLs for serving your application and hosting the SDK through a Content Delivery Network (CDN), you must enable cross-origin resource sharing (CORS) to join a conference using the Dolby Voice Codec. To enable CORS, see the Install the SDK instruction.

example

// A detailed example of constraints
const detailedConstraints = {
  audio: true,
  video: {
    width: { min: 320, max: 1280 },
    height: { min: 240, max: 720 },
  },
};

// The simplest example of constraints
const constraints = { audio: true, video: true };

VoxeetSDK.conference
  .join(conference, { constraints: constraints })
  .then((info) => {})
  .catch((error) => {});

Parameters:

| Name | Type | Description |
|---|---|---|
| conference | Conference | The conference object. |
| options | JoinOptions | The additional options for the joining participant. |

Returns: Promise<Conference>

kick

▸ kick(participant: Participant): Promise<any>

Allows the conference owner, or a participant with adequate permissions, to kick another participant from the conference by revoking the conference access token. The kicked participant cannot join the conference again.

VoxeetSDK.conference.kick(participant);

This method is not available for Real-time Streaming viewers and triggers the UnsupportedError.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The participant who needs to be kicked from the conference. |

Returns: Promise<any>

leave

▸ leave(): Promise<void>

Leaves a conference. This method triggers the streamRemoved events for all participants' streams and the stopped events from the FilePresentationService and the VideoPresentationService, so that the application can clean up UI resources for the local participant.

Returns: Promise<void>

listen

▸ listen(conference: Conference, options?: ListenOptions): Promise<Conference>

Joins a conference as a listener. You can choose to either join, replay, or listen to a conference. The listen method connects to the conference in receive-only mode, which does not allow transmitting video or audio.

Note: Conference events from other listeners are not available for listeners. Only users will receive conference events from other listeners.

Parameters:

| Name | Type | Description |
|---|---|---|
| conference | Conference | The conference object. |
| options? | ListenOptions | The additional options for the joining listener. |

Returns: Promise<Conference>

localStats

▸ localStats(): WebRTCStats

Provides standard WebRTC statistics for the application. Based on the WebRTC statistics, the SDK computes audio and video statistics. Calling this method more often than twice per second (2 Hz) has no effect.
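Since calls above 2 Hz have no effect, an application can throttle its own polling to at most one call every 500 ms and reuse the last result in between. This is a minimal sketch; `createThrottled` is a hypothetical helper, and the injectable clock exists only so that the behavior can be tested.

```javascript
// Hypothetical helper: calls `fn` at most once per `minIntervalMs` and otherwise
// returns the previous result. 500 ms corresponds to the 2 Hz limit.
function createThrottled(fn, minIntervalMs = 500, now = () => Date.now()) {
  let lastCall = -Infinity;
  let lastResult;
  return () => {
    const t = now();
    if (t - lastCall >= minIntervalMs) {
      lastCall = t;
      lastResult = fn();
    }
    return lastResult;
  };
}

// Possible usage in a browser:
// const getStats = createThrottled(() => VoxeetSDK.conference.localStats());
// setInterval(() => render(getStats()), 100); // render is a hypothetical UI function
```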

Returns: WebRTCStats

mute

▸ mute(participant: Participant, isMuted: boolean): void

Stops playing the specified remote participant's audio to the local participant or stops playing the local participant's audio to the conference. The mute method does not notify the server to stop audio stream transmission. To stop sending an audio stream to the server or to stop receiving an audio stream from the server, use the stopAudio method.

The mute method depends on the Dolby Voice usage:

- In conferences where Dolby Voice is not enabled, conference participants can mute themselves or remote participants.

- In conferences where Dolby Voice is enabled, conference participants can only mute themselves.

If you wish to mute remote participants in Dolby Voice conferences, you must use the stop API, which allows conference participants to stop receiving specific audio streams from the server.
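The rules above can be captured in a small decision helper. This is a minimal sketch; `chooseMuteApproach` and its return strings are hypothetical, and the helper only encodes the rules stated in this section ("stop" stands for stopping the audio stream on the server side through the stop API).

```javascript
// Hypothetical helper: picks how to silence a participant locally.
// "mute" = mute method (local playback only); "stop" = stop receiving the stream from the server.
function chooseMuteApproach({ isDolbyVoice, isLocalParticipant }) {
  if (isLocalParticipant) {
    return "mute"; // participants can mute themselves in both conference types
  }
  // Remote participants: mute works only in conferences without Dolby Voice.
  return isDolbyVoice ? "stop" : "mute";
}
```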

Note: Due to a Safari issue, participants who join a conference using Safari and start receiving the empty frames can experience a Safari crash. This problem does not occur during Dolby Voice conferences.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The local or remote conference participant. |
| isMuted | boolean | The mute state: true indicates that a participant is muted, false indicates that a participant is not muted. |

Returns: void

muteOutput

▸ muteOutput(isMuted: boolean): void

Mutes all remote participants in a conference. This method only stops playing participants' audio to the local participant; it does not notify the server to stop audio stream transmission. This method is supported in SDK 3.10 and later.

Parameters:

| Name | Type | Description |
|---|---|---|
| isMuted | boolean | A boolean, true indicates that remote participants are muted, false indicates that remote participants are not muted. |

Returns: void

playBlockedAudio

▸ playBlockedAudio(): void

Plays audio that is blocked by the browser's auto-play policy. Call this method after a user interaction, such as a click or touch, for example in response to the autoplayBlocked event.

Returns: void

replay

▸ replay(conference: Conference, replayOptions?: ReplayOptions, mixingOptions?: MixingOptions): Promise<Conference>

Replays a previously recorded conference. For more information, see the Recording Conferences article.

Parameters:

| Name | Type | Default value | Description |
|---|---|---|---|
| conference | Conference | - | The conference object. |
| replayOptions | ReplayOptions | { offset: 0 } | The replay options. |
| mixingOptions? | MixingOptions | - | The model that notifies the server that a participant who replays the conference is a special participant called Mixer. |

Returns: Promise<Conference>

setSpatialDirection

▸ setSpatialDirection(participant: Participant, direction: SpatialDirection): void

Sets the direction the local participant is facing in space. This method is available only for participants who joined the conference using the join method with the spatialAudio parameter enabled. Otherwise, the SDK triggers UnsupportedError. To set a spatial direction for listeners, use the Set Spatial Listeners Audio REST API.

If the local participant hears audio from the position (0,0,0) facing down the Z-axis and locates a remote participant in the position (1,0,1), the local participant hears the remote participant from their front-right. If the local participant chooses to change the direction they are facing and rotate +90 degrees about the Y-axis, then instead of hearing the speaker from the front-right position, they hear the speaker from the front-left position. The following video presents this example:

For more information, see the SpatialDirection model.

If sending the updated positions to the server fails, the SDK emits the ConferenceService error event with a SpatialAudioError.
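The change from front-right to front-left in the rotation example can be checked with basic vector math. This is a minimal sketch under stated assumptions: it uses the default spatial environment (forward +Z, up +Y, right +X), treats a positive rotation about the Y axis as turning forward (+Z) toward +X, which reproduces the documented outcome, and ignores height. `relativeSide` is a hypothetical helper; it does not call the SDK or model its audio renderer.

```javascript
// Hypothetical helper: where a source sounds relative to a listener who has turned
// `yawDegrees` about the Y axis. Height (y) is ignored in this sketch.
function relativeSide(listener, source, yawDegrees) {
  const yaw = (yawDegrees * Math.PI) / 180;
  const forward = { x: Math.sin(yaw), z: Math.cos(yaw) }; // +Z when yaw is 0
  const right = { x: Math.cos(yaw), z: -Math.sin(yaw) }; // +X when yaw is 0
  const dx = source.x - listener.x;
  const dz = source.z - listener.z;
  const ahead = dx * forward.x + dz * forward.z; // projection onto forward
  const side = dx * right.x + dz * right.z; // projection onto right
  const eps = 1e-9; // tolerance for floating-point noise from sin/cos
  const frontBack = ahead > eps ? "front" : ahead < -eps ? "back" : "";
  const leftRight = side > eps ? "right" : side < -eps ? "left" : "";
  return [frontBack, leftRight].filter(Boolean).join("-") || "center";
}

const origin = { x: 0, y: 0, z: 0 };
const speaker = { x: 1, y: 0, z: 1 };
console.log(relativeSide(origin, speaker, 0)); // "front-right"
console.log(relativeSide(origin, speaker, 90)); // "front-left"
```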

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The local participant. |
| direction | SpatialDirection | The direction the participant is facing in space. |

Returns: void

setSpatialEnvironment

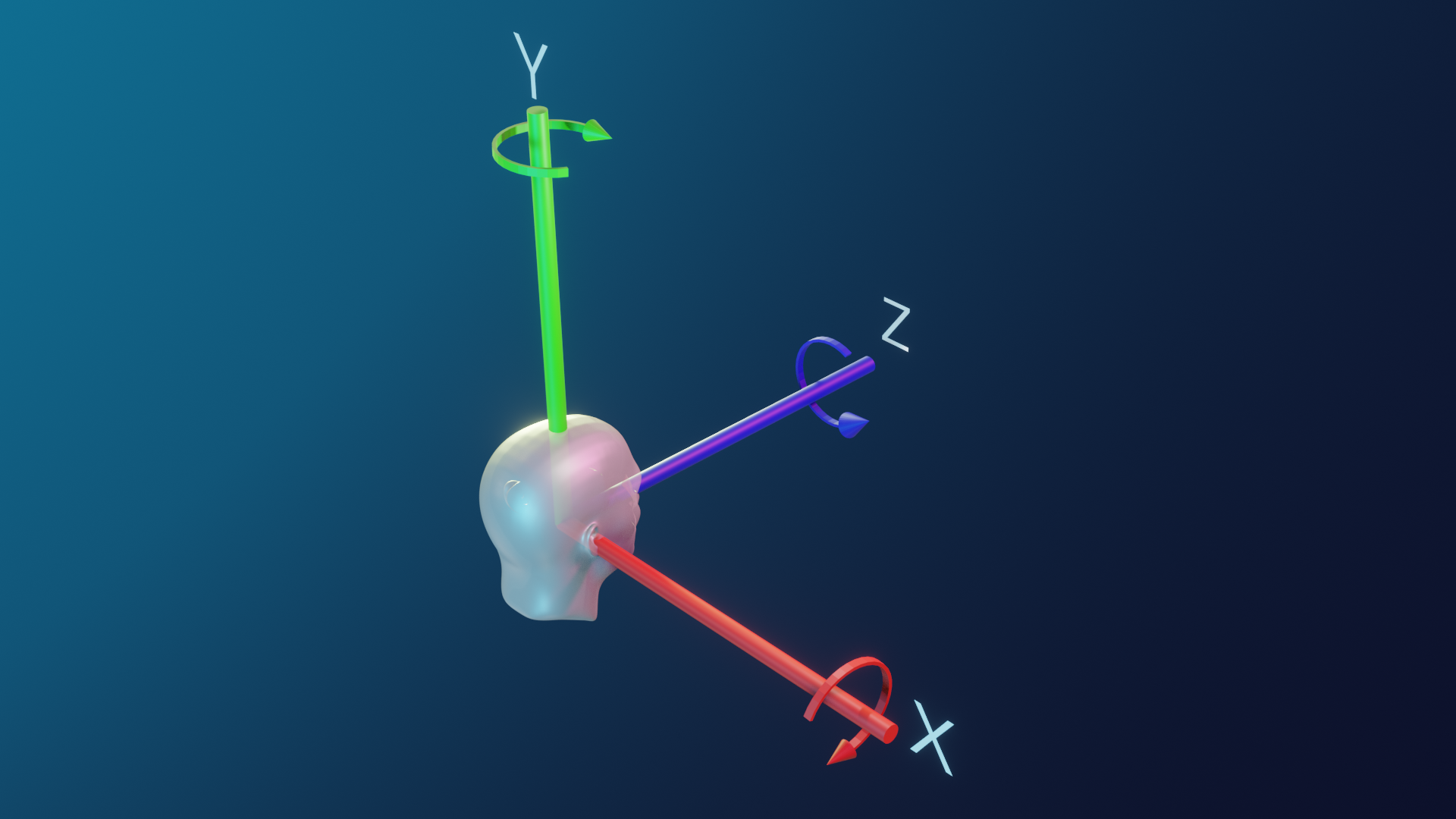

▸ setSpatialEnvironment(scale: SpatialScale, forward: SpatialPosition, up: SpatialPosition, right: SpatialPosition): void

Configures a spatial environment of an application, so the audio renderer understands which directions the application considers forward, up, and right and which units it uses for distance.

This method is available only for participants who joined a conference using the join method with the spatialAudio parameter enabled. Otherwise, SDK triggers the UnsupportedError error. To set a spatial environment for listeners, use the Set Spatial Listeners Audio REST API.

If not called, the SDK uses the default spatial environment, which consists of the following values:

forward= (0, 0, 1), where +Z axis is in frontup= (0, 1, 0), where +Y axis is aboveright= (1, 0, 0), where +X axis is to the rightscale= (1, 1, 1), where one unit on any axis is 1 meter

The default spatial environment is presented in the following diagram:

If sending the updated positions to the server fails, the SDK emits the ConferenceService error event with a SpatialAudioError.

Parameters:

| Name | Type | Description |
|---|---|---|
| scale | SpatialScale | A scale that defines how to convert units from the coordinate system of an application (pixels or centimeters) into meters used by the spatial audio coordinate system. For example, if SpatialScale is set to (100,100,100), 100 of the application's units (cm) map to 1 meter in the audio coordinates. In such a case, if the listener's location is (0,0,0) cm and a remote participant's location is (200,200,200) cm, the listener hears the remote participant as if from the (2,2,2) m location. The scale value must be greater than 0. Otherwise, the SDK emits ParameterError. For more information, see the Spatial Chat article. |
| forward | SpatialPosition | A vector describing the direction the application considers as forward. The value can be either +1, 0, or -1 and must be orthogonal to up and right. Otherwise, the SDK emits ParameterError. |
| up | SpatialPosition | A vector describing the direction the application considers as up. The value can be either +1, 0, or -1 and must be orthogonal to forward and right. Otherwise, the SDK emits ParameterError. |
| right | SpatialPosition | A vector describing the direction the application considers as right. The value can be either +1, 0, or -1 and must be orthogonal to forward and up. Otherwise, the SDK emits ParameterError. |
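The scale conversion and the constraints on forward, up, and right can be illustrated in plain JavaScript. This is a minimal sketch; `toAudioMeters` and `isValidEnvironment` are hypothetical helpers that mimic the rules described in the parameter table, not the SDK's own validation. The nonzero check is an extra assumption, since a zero vector would otherwise pass the orthogonality test trivially.

```javascript
// Converts an application position into audio-space meters by dividing by the scale,
// e.g. scale (100, 100, 100) means 100 application units (cm) equal 1 meter.
function toAudioMeters(position, scale) {
  for (const axis of ["x", "y", "z"]) {
    if (!(scale[axis] > 0)) throw new RangeError(`scale.${axis} must be greater than 0`);
  }
  return { x: position.x / scale.x, y: position.y / scale.y, z: position.z / scale.z };
}

const dot = (a, b) => a.x * b.x + a.y * b.y + a.z * b.z;

// Checks that every component is +1, 0, or -1, that no vector is all zeros,
// and that forward, up, and right are mutually orthogonal.
function isValidEnvironment(forward, up, right) {
  const vectors = [forward, up, right];
  const componentsOk = vectors.every((v) =>
    [v.x, v.y, v.z].every((c) => c === 1 || c === 0 || c === -1)
  );
  const nonZero = vectors.every((v) => dot(v, v) > 0);
  const orthogonal = dot(forward, up) === 0 && dot(forward, right) === 0 && dot(up, right) === 0;
  return componentsOk && nonZero && orthogonal;
}

console.log(toAudioMeters({ x: 200, y: 200, z: 200 }, { x: 100, y: 100, z: 100 })); // { x: 2, y: 2, z: 2 }
```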

Returns: void

setSpatialPosition

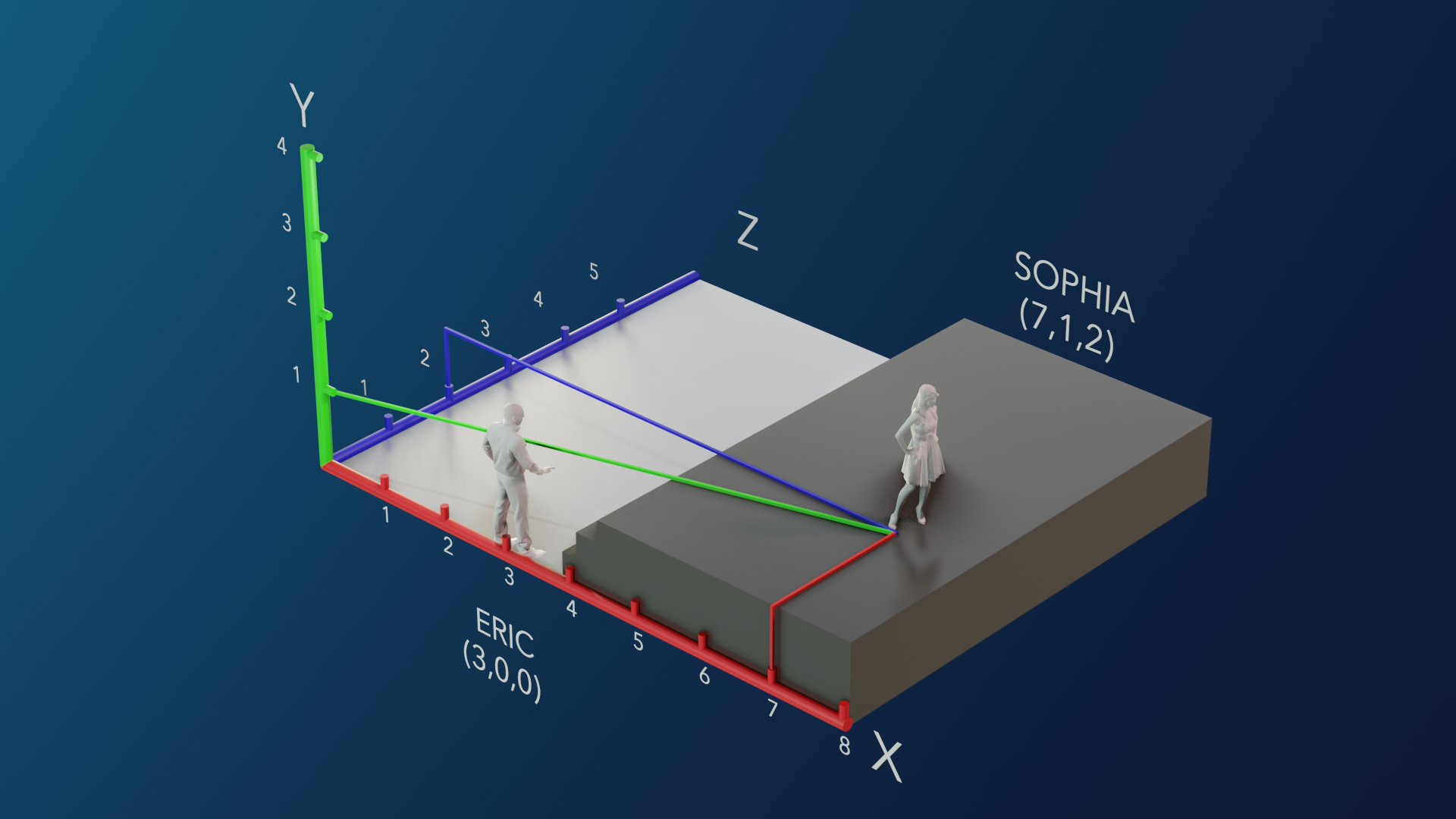

▸ setSpatialPosition(participant: Participant, position: SpatialPosition): void

Sets a participant's position in space to enable the spatial audio experience during a Dolby Voice conference. This method is available only for participants who joined the conference using the join method with the spatialAudio parameter enabled. Otherwise, SDK triggers the UnsupportedError error. To set a spatial position for listeners, use the Set Spatial Listeners Audio REST API.

Depending on the specified participant in the participant parameter, the setSpatialPosition method impacts the location from which audio is heard or from which audio is rendered:

-

When the specified participant is the local participant, setSpatialPosition sets a location from which the local participant listens to a conference. If the local participant does not have an established location, the participant hears audio from the default location (0, 0, 0).

-

When the specified participant is a remote participant, setSpatialPosition ensures the remote participant's audio is rendered from the specified location in space. Setting the remote participants’ positions is required in conferences that use the individual spatial audio style. In these conferences, if a remote participant does not have an established location, the participant does not have a default position and will remain muted until a position is specified. The shared spatial audio style does not support setting the remote participants' positions. In conferences that use the shared style, the spatial scene is shared by all participants, so that each client can set a position and participate in the shared scene.

For example, if a local participant Eric, who uses the individual spatial audio style and does not have a set direction, calls setSpatialPosition(VoxeetSDK.session.participant, {x:3,y:0,z:0}), Eric hears audio from the position (3,0,0). If Eric also calls setSpatialPosition(Sophia, {x:7,y:1,z:2}), he hears Sophia from the position (7,1,2). In this case, Eric hears Sophia 4 meters to the right, 1 meter above, and 2 meters in front. The following graphic presents the participants' locations:

If sending the updated positions to the server fails, the SDK emits the ConferenceService error event with a SpatialAudioError.
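The Eric and Sophia example reduces to subtracting the listener's position from the speaker's position. This is a minimal sketch, assuming the default spatial environment (+X right, +Y up, +Z forward, 1 unit = 1 meter) and no direction set for the listener; `describeOffset` is a hypothetical helper.

```javascript
// Hypothetical helper: offset of a speaker relative to a listener, in meters,
// under the default spatial environment and with no direction set.
function describeOffset(listener, speaker) {
  const dx = speaker.x - listener.x; // positive = to the right
  const dy = speaker.y - listener.y; // positive = above
  const dz = speaker.z - listener.z; // positive = in front
  return { right: dx, above: dy, inFront: dz };
}

const eric = { x: 3, y: 0, z: 0 };
const sophia = { x: 7, y: 1, z: 2 };
console.log(describeOffset(eric, sophia)); // { right: 4, above: 1, inFront: 2 }
```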

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | Participant | The selected participant. Using the local participant sets the location from which the participant will hear a conference. Using a remote participant sets the position from which the participant's audio will be rendered. |
| position | SpatialPosition | The participant's audio location. |

Returns: void

simulcast

▸ simulcast(requested: Array<ParticipantQuality>): Promise<any>

Sets the quality of the received Simulcast streams. For more information, see the Simulcast guide.

Parameters:

| Name | Type | Description |
|---|---|---|
| requested | Array<ParticipantQuality> | An array that includes the stream qualities for specific conference participants. |

Returns: Promise<any>

startScreenShare

▸ startScreenShare(sourceId: any): any

Deprecated

This method is deprecated in SDK 3.9 and replaced with a new startScreenShare method.

Starts a screen-sharing session. This method is not available on mobile browsers; participants who join a conference using a mobile browser cannot share a screen.

example

VoxeetSDK.conference
  .startScreenShare()
  .then(() => {})
  .catch((e) => {});

Parameters:

| Name | Type | Description |
|---|---|---|
| sourceId | any | The device ID. If you use multiple screens, use this parameter to specify which screen you want to share. |

Returns: any

startScreenShare

▸ startScreenShare(options: ScreenshareOptions)

Starts sharing the local participant's screen. This method is not available on mobile browsers; participants who join a conference using a mobile browser cannot share their screens. The method is available in SDK 3.9 and later and is not supported for listeners. Calling this method by a listener results in the UnsupportedError.

SDK 3.9 and earlier support sharing only one screen per conference. SDK 3.10 and later allow sharing two screens in one conference, so two participants can share their screens at the same time.

By default, the method supports sending the computer's audio to remote participants while sharing a screen. However, this functionality is enabled and supported only on Chrome and Edge for users who use the Opus codec. On Windows, the method allows sending the system's audio. On macOS, the method allows sending audio only from a browser tab. This functionality is not supported for any other SDK, which means that participants who use, for example, the iOS SDK cannot hear the shared audio. If you experience audio issues while sharing system audio, see the Troubleshooting guide.
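The conditions for sharing computer audio can be summarized in a helper that an application might use to decide whether to offer an audio option in its screen-share UI. This is a minimal sketch; `screenShareAudioSource` and its string inputs are hypothetical, and the helper only encodes the rules stated in this section.

```javascript
// Hypothetical helper: which computer audio, if any, can accompany a screen share.
// browser: "Chrome" | "Edge" | other; os: "Windows" | "macOS" | other; codec: "Opus" | "DVC".
function screenShareAudioSource({ browser, os, codec, isMobile }) {
  if (isMobile) return null; // screen sharing itself is unavailable on mobile browsers
  const supportedBrowser = browser === "Chrome" || browser === "Edge";
  if (!supportedBrowser || codec !== "Opus") return null;
  if (os === "Windows") return "system"; // system audio
  if (os === "macOS") return "tab"; // audio from a browser tab only
  return null; // other platforms are not described in this section
}
```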

Parameters:

| Name | Type | Description |
|---|---|---|
| options | ScreenshareOptions | Options that allow you to select a screen, send the computer's audio, and modify parameters of the shared screen. |

Returns: any

stopScreenShare

▸ stopScreenShare(): Promise<any>

Stops the screen-sharing session. This method is not available for listeners. Calling this method by a listener results in the UnsupportedError.

Returns: Promise<any>

updatePermissions

▸ updatePermissions(participantPermissions: Array<ParticipantPermissions>): Promise<any>

Updates the participant's conference permissions. If a participant does not have permission to perform a specific action, this action is not available for this participant during a conference, and the participant receives InsufficientPermissionsError. If a participant started a specific action and then lost permission to perform this action, the SDK stops the blocked action. For example, if a participant started sharing a screen and received updated permissions that do not allow them to share a screen, the SDK stops the screen-sharing session and the participant cannot start sharing the screen again.

VoxeetSDK.conference.updatePermissions(participantPermissions); // participantPermissions: Array<ParticipantPermissions>

Parameters:

| Name | Type | Description |
|---|---|---|
| participantPermissions | Array<ParticipantPermissions> | The updated participant's permissions. |

Returns: Promise<any>

videoForwarding

▸ videoForwarding(options: VideoForwardingOptions): Promise<void>

Sets the video forwarding functionality for the local participant. The method allows:

- Setting the maximum number of video streams that may be transmitted to the local participant

- Prioritizing specific participants' video streams that need to be transmitted to the local participant

- Changing the video forwarding strategy that defines how the SDK should select conference participants whose videos will be received by the local participant

This method is available only in SDK 3.6 and later and is not available for Real-time Streaming viewers. Calling this method by an RTS viewer triggers the UnsupportedError.

Parameters:

| Name | Type | Description |
|---|---|---|
| options | VideoForwardingOptions | The video forwarding options. |

Returns: Promise<void>
