ConferenceService

The ConferenceService allows an application to manage a conference life cycle and interact with the conference. Using the service, you can create, join, and leave conferences.

For more information about creating and joining conferences, see the Basic Application Concepts guides.

Events

participantAdded

▸ participantAdded(participant: VTParticipant)

Emitted when a new participant is invited to a conference. The SDK does not emit the participantAdded event for the local participant. Listeners only receive the participantAdded events about users; they do not receive events for other listeners. Users receive the participantAdded events about users and do not receive any events about listeners. To notify all application users about the number of participants who are present at a conference, use the activeParticipants event.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The invited participant who is added to a conference. |
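The visibility rules for participantAdded fit in a small predicate. This is a hypothetical sketch: `ParticipantRole` and `receivesParticipantAdded` are illustrative names, not SDK API. The function only encodes the rules stated in the paragraph above.

```swift
// Hypothetical model of the participantAdded visibility rules; not part of the SDK.
enum ParticipantRole {
    case user      // joined with the join method
    case listener  // joined with the listen method
}

/// Returns true if an application whose local participant has `receiverRole`
/// receives a participantAdded event about a participant with `subjectRole`.
func receivesParticipantAdded(receiverRole: ParticipantRole,
                              subjectRole: ParticipantRole,
                              subjectIsLocal: Bool) -> Bool {
    // The SDK does not emit participantAdded for the local participant.
    if subjectIsLocal { return false }
    switch receiverRole {
    case .listener:
        // Listeners receive events about users, not about other listeners.
        return subjectRole == .user
    case .user:
        // Users receive events about users, not about listeners.
        return subjectRole == .user
    }
}
```

Both branches return the same result. They are kept separate only to mirror the two sentences in the description.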

participantUpdated

▸ participantUpdated(participant: VTParticipant)

Emitted when a participant changes status. Listeners only receive the participantUpdated events about users; they do not receive events for other listeners. Users receive the participantUpdated events about users and do not receive any events about listeners. To notify all application users about the number of participants who are present at a conference, use the activeParticipants event.

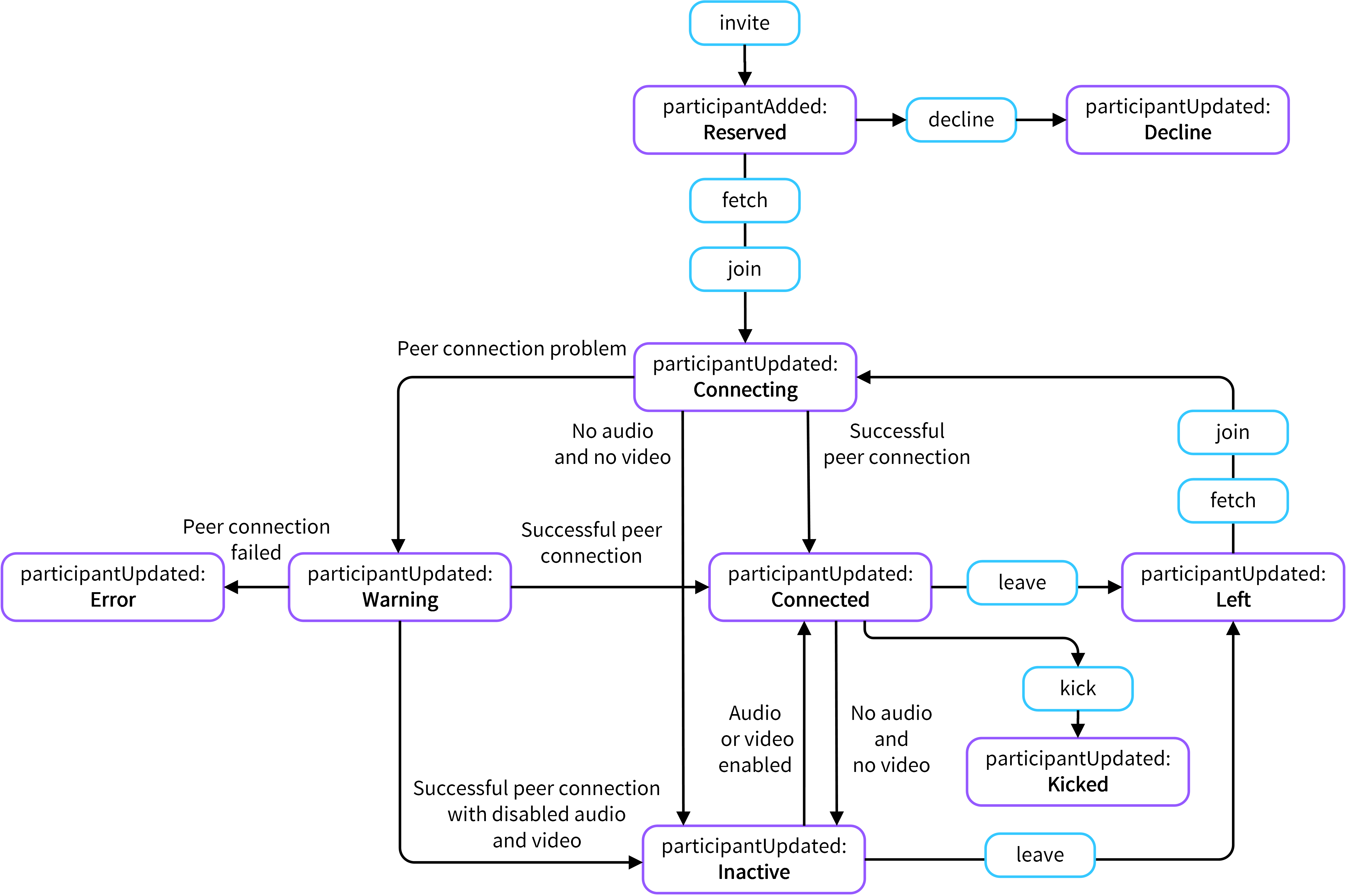

The following graphic shows possible status changes during a conference:

Diagram that presents the possible status changes

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The conference participant who changed status. |

permissionsUpdated

▸ permissionsUpdated(permissions: [Int])

Emitted when the local participant's permissions are updated.

Parameters:

| Name | Type | Description |
|---|---|---|
| permissions | [Int] | The updated conference permissions. |

statusUpdated

▸ statusUpdated(status: VTConferenceStatus)

Emitted when the conference status is updated.

Parameters:

| Name | Type | Description |
|---|---|---|
| status | VTConferenceStatus | The updated conference status. |

streamAdded

▸ streamAdded(participant: VTParticipant, stream: MediaStream)

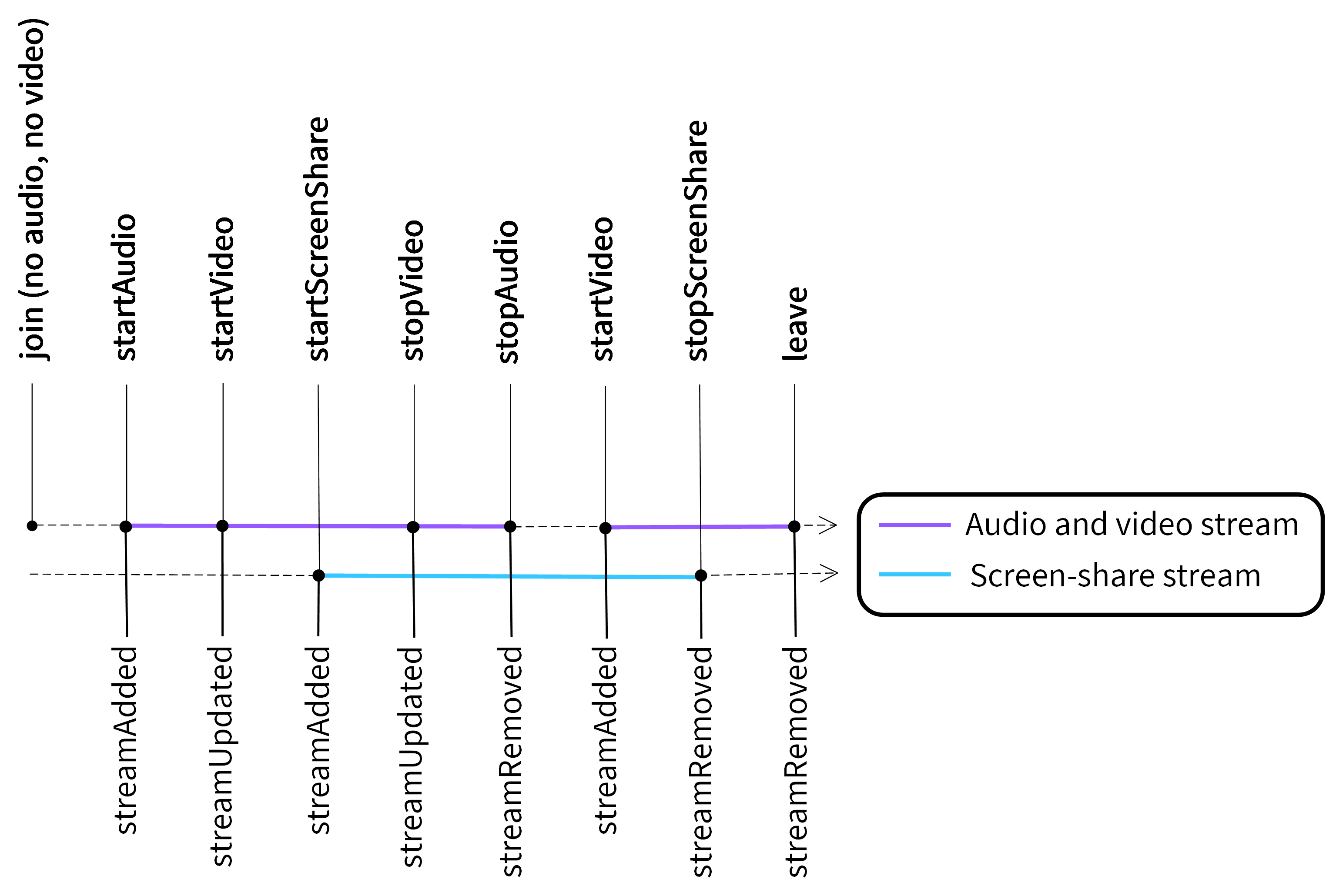

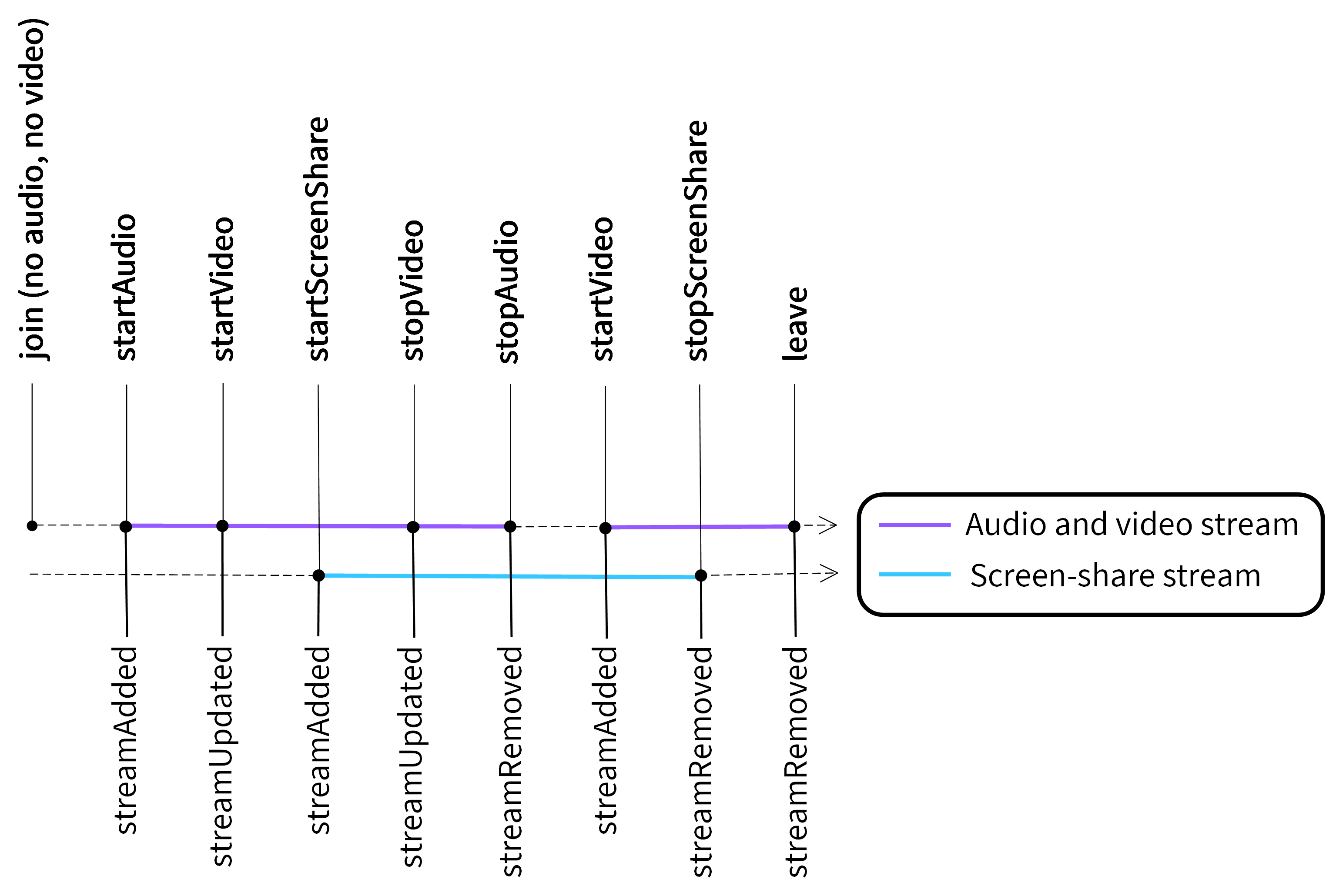

Emitted when the SDK adds a new stream to a conference participant. Each conference participant can be connected to two streams: the audio and video stream and the screen-share stream. If a participant enables audio or video, the SDK adds the audio and video stream to the participant and emits the streamAdded event to all participants. When a participant is connected to the audio and video stream and changes the stream, for example, enables a camera while using a microphone, the SDK updates the audio and video stream and emits the streamUpdated event. When a participant starts sharing a screen, the SDK adds the screen-share stream to this participants and emits the streamAdded event to all participants. The following graphic shows this behavior:

The difference between the streamAdded and streamUpdated events

When a new participant joins a conference with audio and video enabled, the SDK emits the streamAdded event that includes both audio and video tracks.

The SDK can also emit the streamAdded event to the local participant only. When the local participant uses the stopAudio method to locally mute a selected remote participant who does not use a camera, the local participant receives the streamRemoved event. After the local participant calls the startAudio method for this remote participant, the local participant receives the streamAdded event.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The participant whose stream was added to a conference. |
| stream | MediaStream | The added media stream. |
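An application that renders video tiles typically keeps a per-participant record of the two possible streams and updates it from streamAdded, streamUpdated, and streamRemoved. The sketch below is hypothetical: `StreamKind`, `TrackedStream`, and `StreamTracker` are illustrative stand-ins rather than SDK types, and it assumes your application maps each MediaStream to either the audio and video stream or the screen-share stream.

```swift
// Hypothetical bookkeeping for the stream events; these are not SDK types.
enum StreamKind: Hashable {
    case camera       // the audio and video stream
    case screenShare  // the screen-share stream
}

struct TrackedStream {
    var hasAudio: Bool
    var hasVideo: Bool
}

final class StreamTracker {
    // At most one stream of each kind per participant, keyed by participant ID.
    private(set) var streams: [String: [StreamKind: TrackedStream]] = [:]

    // Call from streamAdded.
    func streamAdded(participantID: String, kind: StreamKind, stream: TrackedStream) {
        streams[participantID, default: [:]][kind] = stream
    }

    // Call from streamUpdated; an update only applies to a stream that already exists.
    func streamUpdated(participantID: String, kind: StreamKind, stream: TrackedStream) {
        guard streams[participantID]?[kind] != nil else { return }
        streams[participantID]?[kind] = stream
    }

    // Call from streamRemoved; drops the participant entry once no streams remain.
    func streamRemoved(participantID: String, kind: StreamKind) {
        streams[participantID]?[kind] = nil
        if streams[participantID]?.isEmpty == true {
            streams[participantID] = nil
        }
    }

    func streamCount(for participantID: String) -> Int {
        streams[participantID]?.count ?? 0
    }
}
```

Keying by stream kind mirrors the rule that each participant has at most one audio and video stream and one screen-share stream.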

streamUpdated

▸ streamUpdated(participant: VTParticipant, stream: MediaStream)

Emitted when a conference participant who is connected to the audio and video stream changes the stream by enabling a microphone while using a camera or by enabling a camera while using a microphone. The event is emitted to all conference participants. The following graphic shows this behavior:

The difference between the streamAdded and streamUpdated events

The SDK can also emit the streamUpdated event to the local participant only. When the local participant uses the stopAudio or startAudio method to locally mute or unmute a selected remote participant who uses a camera, the local participant receives the streamUpdated event.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The participant whose stream was updated during a conference. |
| stream | MediaStream | The updated media stream. |

streamRemoved

▸ streamRemoved(participant: VTParticipant, stream: MediaStream)

Emitted when the SDK removes a stream from a conference participant. Each conference participant can be connected to two streams: the audio and video stream and the screen-share stream. If a participant disables audio and video or stops a screen-share presentation, the SDK removes the corresponding stream and emits the streamRemoved event to all conference participants.

The SDK can also emit the streamRemoved event to the local participant only. When the local participant uses the stopAudio method to locally mute a selected remote participant who does not use a camera, the local participant receives the streamRemoved event.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The participant whose stream was removed from a conference. |
| stream | MediaStream | The removed media stream. |

Accessors

current

▸ current: VTConference?

Returns information about the current conference. Use this accessor to get the information available in the VTConference object, such as the conference alias and ID, whether the conference is new, the list of conference participants, conference parameters, the local participant's conference permissions, and the conference status.

Returns: VTConference?

delegate

▸ delegate: VTConferenceDelegate

The delegate that the conference service uses to communicate with your application, for example, to deliver the events described in the Events section.

Returns: VTConferenceDelegate

defaultBuiltInSpeaker

▸ defaultBuiltInSpeaker: Bool

A Boolean that sets the default built-in output device for a conference: the built-in speaker (true) or the built-in receiver (false). By default, defaultBuiltInSpeaker is set to true.

Returns: Bool

defaultVideo

▸ defaultVideo: Bool

A Boolean that sets the default camera state. When set to false, participants join a conference without video. When set to true, the SDK enables participants' cameras when they join a conference. By default, defaultVideo is set to false.

Returns: Bool

maxVideoForwarding

▸ maxVideoForwarding: Int

Gets the maximum number of video streams that may be transmitted to the local participant.

Returns: Int

videoForwardingStrategy

▸ videoForwardingStrategy(): VideoForwardingStrategy

Gets the video forwarding strategy that the local participant uses in the current conference. This method is available only in SDK 3.6 and later.

Returns: VideoForwardingStrategy

Methods

audioLevel

▸ audioLevel(participant: VTParticipant) -> Double

Gets the participant's audio level. The audio level value ranges from 0.0 to 1.0.

Note: When the local participant is muted, audioLevel can still return a non-zero value, and isSpeaking returns true if the audio level is greater than 0.05. This lets you display a warning that notifies the local participant that their audio is not sent to the conference. However, when the push notification type is set to callKit, the mute method uses the CallKit mute action to mute the input audio source. In this case, the SDK does not receive any audio samples and does not return values that let you detect when the local participant starts talking while muted.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The conference participant. |

Returns: Double
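The muted-speaker warning described in the note reduces to a threshold check that is only meaningful when audio samples still arrive. This is a hypothetical helper: `MuteMode` and `shouldWarnMutedSpeaker` are illustrative names. In an application, the `audioLevel` argument would come from the audioLevel method, and the SDK's own isSpeaking already applies the 0.05 threshold.

```swift
/// Speaking threshold taken from the note above.
let speakingThreshold = 0.05

/// Hypothetical stand-in for how mute is applied on the device.
enum MuteMode {
    case software  // push notification type none: audio samples still arrive
    case callKit   // push notification type callKit: no samples while muted
}

/// Returns true if the app should warn a muted participant that they are talking.
func shouldWarnMutedSpeaker(isMuted: Bool, audioLevel: Double, muteMode: MuteMode) -> Bool {
    // Only a muted participant needs the warning.
    guard isMuted else { return false }
    // With CallKit mute the SDK receives no samples, so the level cannot be used.
    guard muteMode == .software else { return false }
    return audioLevel > speakingThreshold
}
```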

create

▸ create(options: VTConferenceOptions?, success: ((_ conference: VTConference) -> Void)?, fail: ((_ error: NSError) -> Void)?)

Creates a conference.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| options | VTConferenceOptions? | nil | The conference options. |
| success | ((_ conference: VTConference) -> Void)? | nil | The block to execute when the operation is successful. |
| fail | ((_ error: NSError) -> Void)? | nil | The block to execute when the operation fails. |

demo

▸ demo(spatialAudio: Bool = false, completion: ((_ error: NSError?) -> Void)? = nil)

Creates a demo conference.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| spatialAudio | Bool | false | Enables or disables spatial audio in the demo conference. |
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |

fetch

▸ fetch(conferenceID: String, completion: (VTConference) -> Void)

Provides the conference object that allows joining a conference. For more information about using the fetch method, see the Conferencing document.

Parameters:

| Name | Type | Description |
|---|---|---|
| conferenceID | String | The conference ID. |
| completion | (VTConference) -> Void | The block to execute when the query completes. |

isSpeaking

▸ isSpeaking(participant: VTParticipant) -> Bool

Gets the participant's current speaking status.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The conference participant. |

Returns: Bool

isMuted

▸ isMuted() -> Bool

Indicates whether the local participant is muted.

Note: This API is no longer supported for remote participants.

Returns: Bool

join

▸ join(conference: VTConference, options: VTJoinOptions?, success: ((_ conference: VTConference) -> Void)?, fail: ((_ error: NSError) -> Void)?)

Joins a conference. For more information about joining conferences, see the Conferencing document.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| conference | VTConference | - | The conference object. |
| options | VTJoinOptions? | nil | The additional options for the joining participant. |
| success | ((_ conference: VTConference) -> Void)? | nil | The block to execute when the operation is successful. |
| fail | ((_ error: NSError) -> Void)? | nil | The block to execute when the operation fails. |
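A common pattern is to call create and then join from the create success block, forwarding either failure to a single callback. The sketch below is hypothetical: `Conference`, `ConferenceCreatingJoining`, `createAndJoin`, and `FakeService` are illustrative stand-ins that let the chaining compile and run on its own. In an application, the two protocol methods would forward to the ConferenceService create and join methods described on this page.

```swift
import Foundation

// Stand-ins for the SDK types so the sketch is self-contained; hypothetical.
struct Conference {
    let id: String
    let alias: String
}

protocol ConferenceCreatingJoining {
    func create(alias: String,
                success: @escaping (Conference) -> Void,
                fail: @escaping (NSError) -> Void)
    func join(conference: Conference,
              success: @escaping (Conference) -> Void,
              fail: @escaping (NSError) -> Void)
}

/// Creates a conference, joins it, and reports a single Result.
func createAndJoin(using service: ConferenceCreatingJoining,
                   alias: String,
                   completion: @escaping (Result<Conference, NSError>) -> Void) {
    service.create(alias: alias, success: { created in
        // Join only after create succeeds; forward either outcome.
        service.join(conference: created,
                     success: { joined in completion(.success(joined)) },
                     fail: { error in completion(.failure(error)) })
    }, fail: { error in
        completion(.failure(error))
    })
}

// A synchronous fake service used only for illustration.
struct FakeService: ConferenceCreatingJoining {
    var failJoin = false

    func create(alias: String,
                success: @escaping (Conference) -> Void,
                fail: @escaping (NSError) -> Void) {
        success(Conference(id: "conf-1", alias: alias))
    }

    func join(conference: Conference,
              success: @escaping (Conference) -> Void,
              fail: @escaping (NSError) -> Void) {
        if failJoin {
            fail(NSError(domain: "FakeService", code: 1))
        } else {
            success(conference)
        }
    }
}
```

Keeping join inside the create success block guarantees that join never runs with a conference object that failed to be created.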

kick

▸ kick(participant: VTParticipant, completion: ((_ error: NSError) -> Void)?)

Allows the conference owner, or a participant with adequate permissions, to kick another participant from the conference by revoking the conference access token. The kicked participant cannot join the conference again. This method is not available for mixed listeners.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| participant | VTParticipant | - | The participant to kick from the conference. |
| completion | ((_ error: NSError) -> Void)? | nil | The block to execute when the query completes. |

leave

▸ leave(completion: ((_ error: NSError?) -> Void)?)

Leaves the conference.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |

listen

▸ listen(conference: VTConference, options: VTListenOptions?, success: ((_ conference: VTConference) -> Void)?, fail: ((_ error: NSError) -> Void)?)

Joins a conference as a listener.

Note: Listeners do not receive conference events about other listeners. Only users receive conference events about listeners.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| conference | VTConference | - | The conference object. |
| options | VTListenOptions? | nil | The additional options for the joining listener. |
| success | ((_ conference: VTConference) -> Void)? | nil | The block to execute when the operation is successful. |
| fail | ((_ error: NSError) -> Void)? | nil | The block to execute when the operation fails. |

localStats

▸ localStats() -> [[String: Any]]?

Provides the WebRTC statistics.

Returns: [[String: Any]]?

mute

▸ mute(participant: VTParticipant, isMuted: Bool, completion: ((_ error: NSError?) -> Void)? = nil)

Stops playing the specified remote participant's audio to the local participant or stops playing the local participant's audio to the conference. The mute method does not notify the server to stop audio stream transmission. To stop sending an audio stream to the server or to stop receiving an audio stream from the server, use the stopAudio method.

Note: The mute method depends on the Dolby Voice usage and the set push notification type:

- In conferences where Dolby Voice is not enabled, conference participants can mute themselves or remote participants.

- In conferences where Dolby Voice is enabled, conference participants can only mute themselves.

- When the push notification type is set to callKit, the mute method uses the CallKit mute action to mute the input audio source. In this case, the SDK does not receive any audio samples and does not return values that let you detect when the local participant starts talking while muted.

- When the push notification type is set to none, the mute method uses software mute, so the SDK can still receive audio samples. In this case, isSpeaking and audioLevel still return values that let you detect when the local participant starts talking while muted.

If you wish to mute remote participants in Dolby Voice conferences, you must use the stopAudio API. This API allows the conference participants to stop receiving the specific audio streams from the server.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| participant | VTParticipant | - | The participant to mute or unmute: the local participant or a remote participant. |
| isMuted | Bool | - | The mute state: true indicates that the participant is muted, and false indicates that the participant is not muted. |
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |
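The rules in the note above determine which method to call. This hypothetical helper encodes them: `MuteAPI` and `apiToMute` are illustrative names. It assumes the application already knows whether the conference uses Dolby Voice. It does not model the push notification type, which only changes how a local mute is applied.

```swift
// Hypothetical decision helper mirroring the mute rules above; not SDK API.
enum MuteAPI {
    case mute       // the mute method
    case stopAudio  // the stopAudio method: stop receiving the stream from the server
}

func apiToMute(targetIsLocal: Bool, isDolbyVoiceConference: Bool) -> MuteAPI {
    // Participants can always mute themselves with mute.
    if targetIsLocal { return .mute }
    // Muting remote participants with mute works only outside Dolby Voice conferences.
    return isDolbyVoiceConference ? .stopAudio : .mute
}
```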

muteOutput

▸ muteOutput(isMuted: Bool, completion: ((_ error: NSError?) -> Void)?)

Controls playing remote participants' audio to the local participant.

Note: This API is only supported when the client connects to a Dolby Voice conference.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| isMuted | Bool | - | The mute state: true indicates that the local participant's application does not play the remote participants' audio, and false indicates that it plays the remote participants' audio. |
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |

replay

▸ replay(conference: VTConference, options: VTReplayOptions?, completion: ((_ error: NSError?) -> Void)?)

Replays the recorded conference.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| conference | VTConference | - | The conference object. |
| options | VTReplayOptions? | nil | The parameters responsible for replaying conferences. |
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |

setSpatialDirection

▸ setSpatialDirection(participant: VTParticipant, direction: VTSpatialDirection, completion: ((_ error: NSError?) -> Void)? = nil)

Sets the direction the local participant is facing in space. This method is available only for participants who joined the conference using the join method with the spatialAudio parameter enabled. Otherwise, the SDK triggers the spatialAudio error. To set a spatial direction for listeners, use the Set Spatial Listeners Audio REST API.

If the local participant hears audio from the position (0,0,0) facing down the Z-axis and locates a remote participant in the position (1,0,1), the local participant hears the remote participant from their front-right. If the local participant chooses to change the direction they are facing and rotate +90 degrees about the Y-axis, then instead of hearing the speaker from the front-right position, they hear the speaker from the front-left position. The following video presents this example:

For more information, see the VTSpatialDirection model.

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The local participant. |
| direction | VTSpatialDirection | The direction the participant is facing in space. |
| completion | ((_ error: NSError?) -> Void)? | The block to execute when the query completes. |
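The front-right to front-left example can be checked numerically. This is an illustration only: `Vec3`, `rotateAboutY`, and `perceivedSide` are hypothetical helpers, and the rotation convention (a +90 degree turn about the Y-axis maps the +Z forward vector to +X) is an assumption chosen because it reproduces the outcome described above. The SDK performs the actual rendering; the application only passes a VTSpatialDirection.

```swift
import Foundation

// Minimal vector type; hypothetical, not VTSpatialPosition.
struct Vec3 { var x, y, z: Double }

func dot(_ a: Vec3, _ b: Vec3) -> Double { a.x * b.x + a.y * b.y + a.z * b.z }

/// Rotates v about the Y axis using the standard rotation matrix
/// (assumed convention: +90 degrees maps +Z to +X).
func rotateAboutY(_ v: Vec3, degrees: Double) -> Vec3 {
    let t = degrees * .pi / 180
    return Vec3(x: v.x * cos(t) + v.z * sin(t),
                y: v.y,
                z: -v.x * sin(t) + v.z * cos(t))
}

/// Describes where `source` is heard by a listener at the origin who has
/// turned `listenerYawDegrees` about Y, in the default environment
/// (forward = +Z, right = +X).
func perceivedSide(of source: Vec3, listenerYawDegrees: Double) -> String {
    let forward = rotateAboutY(Vec3(x: 0, y: 0, z: 1), degrees: listenerYawDegrees)
    let right = rotateAboutY(Vec3(x: 1, y: 0, z: 0), degrees: listenerYawDegrees)
    let front = dot(source, forward) > 0 ? "front" : "back"
    let side = dot(source, right) > 0 ? "right" : "left"
    return "\(front)-\(side)"
}
```

For the remote participant at (1,0,1), `perceivedSide` returns "front-right" with no rotation and "front-left" after the +90 degree turn.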

setSpatialEnvironment

▸ setSpatialEnvironment(scale: VTSpatialScale, forward: VTSpatialPosition, up: VTSpatialPosition, right: VTSpatialPosition, completion: ((_ error: NSError?) -> Void)? = nil)

Configures a spatial environment of an application, so the audio renderer understands which directions the application considers forward, up, and right and which units it uses for distance.

This method is available only for participants who joined the conference using the join method with the spatialAudio parameter enabled. Otherwise, the SDK triggers the spatialAudio error. To set a spatial environment for listeners, use the Set Spatial Listeners Audio REST API.

If not called, the SDK uses the default spatial environment, which consists of the following values:

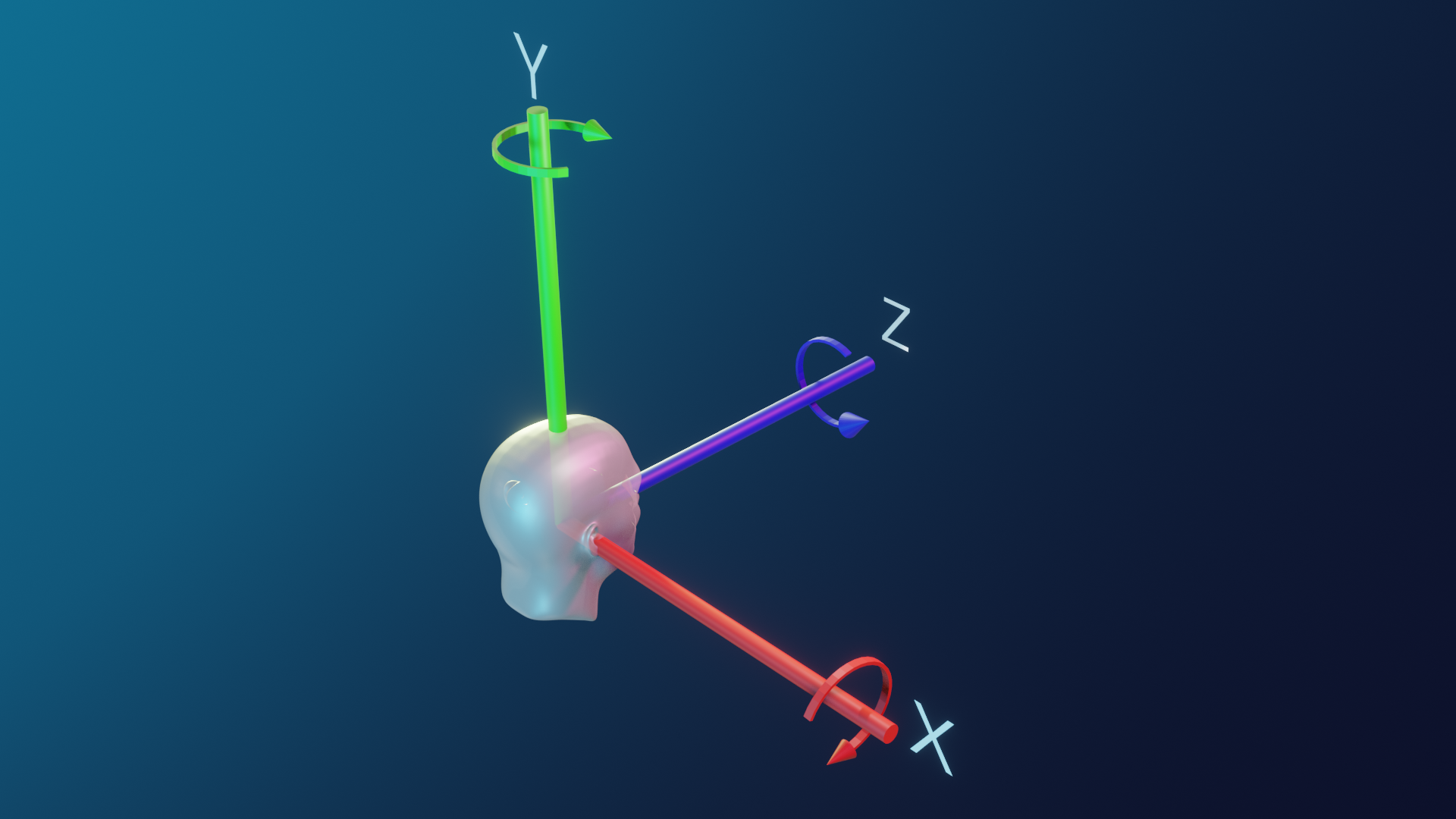

forward= (0, 0, 1), where +Z axis is in frontup= (0, 1, 0), where +Y axis is aboveright= (1, 0, 0), where +X axis is to the rightscale= (1, 1, 1), where one unit on any axis is 1 meter

The default spatial environment is presented in the following diagram:

Parameters:

| Name | Type | Description |
|---|---|---|
| scale | VTSpatialScale | A scale that defines how to convert units from the coordinate system of an application (pixels or centimeters) into meters used by the spatial audio coordinate system. For example, if scale is set to (100,100,100), 100 of the application's units (cm) map to 1 meter in the audio coordinates. In such a case, if the listener's location is (0,0,0)cm and a remote participant's location is (200,200,200)cm, the listener hears the remote participant from the (2,2,2)m location. The scale value must be greater than 0. For more information, see the Spatial Chat article. |
| forward | VTSpatialPosition | A vector describing the direction the application considers forward. The value must be orthogonal to up and right. |
| up | VTSpatialPosition | A vector describing the direction the application considers up. The value must be orthogonal to forward and right. |
| right | VTSpatialPosition | A vector describing the direction the application considers right. The value must be orthogonal to forward and up. |
| completion | ((_ error: NSError?) -> Void)? | The block to execute when the query completes. |
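The scale example and the orthogonality requirement can be checked before calling setSpatialEnvironment. This is a hypothetical sketch: `Vec3`, `toMeters`, and `isValidBasis` are illustrative helpers, not SDK API. It assumes the scale is expressed as application units per meter on each axis, as in the (100,100,100) example.

```swift
// Hypothetical helpers illustrating the setSpatialEnvironment parameters.
struct Vec3 { var x, y, z: Double }

func dot(_ a: Vec3, _ b: Vec3) -> Double { a.x * b.x + a.y * b.y + a.z * b.z }

/// Converts a position in application units to meters, given how many
/// application units make up one meter on each axis.
func toMeters(_ p: Vec3, scale: Vec3) -> Vec3? {
    // The scale must be greater than 0 on every axis.
    guard scale.x > 0, scale.y > 0, scale.z > 0 else { return nil }
    return Vec3(x: p.x / scale.x, y: p.y / scale.y, z: p.z / scale.z)
}

/// Checks that forward, up, and right are non-zero and mutually orthogonal.
func isValidBasis(forward: Vec3, up: Vec3, right: Vec3, tolerance: Double = 1e-9) -> Bool {
    let nonZero = [forward, up, right].allSatisfy { dot($0, $0) > tolerance }
    return nonZero
        && abs(dot(forward, up)) < tolerance
        && abs(dot(forward, right)) < tolerance
        && abs(dot(up, right)) < tolerance
}
```

With a scale of (100,100,100), the position (200,200,200) converts to (2,2,2), matching the example in the table. The default forward, up, and right vectors pass `isValidBasis`.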

setSpatialPosition

▸ setSpatialPosition(participant: VTParticipant, position: VTSpatialPosition, completion: ((_ error: NSError?) -> Void)? = nil)

Sets a participant's position in space to enable the spatial audio experience during a Dolby Voice conference. This method is available only for participants who joined the conference using the join method with the spatialAudio parameter enabled. Otherwise, the SDK triggers the spatialAudio error. To set a spatial position for listeners, use the Set Spatial Listeners Audio REST API.

Depending on the specified participant in the participant parameter, the setSpatialPosition method impacts the location from which audio is heard or from which audio is rendered:

- When the specified participant is the local participant, setSpatialPosition sets a location from which the local participant listens to a conference. If the local participant does not have an established location, the participant hears audio from the default location (0, 0, 0).

- When the specified participant is a remote participant, setSpatialPosition ensures the remote participant's audio is rendered from the specified location in space. Setting the remote participants' positions is required in conferences that use the individual spatial audio style. In these conferences, if a remote participant does not have an established location, the participant does not have a default position and remains muted until a position is specified. The shared spatial audio style does not support setting the remote participants' positions. In conferences that use the shared style, the spatial scene is shared by all participants, so that each client can set a position and participate in the shared scene. Calling setSpatialPosition for remote participants in the shared spatial audio style triggers the spatialAudio error.

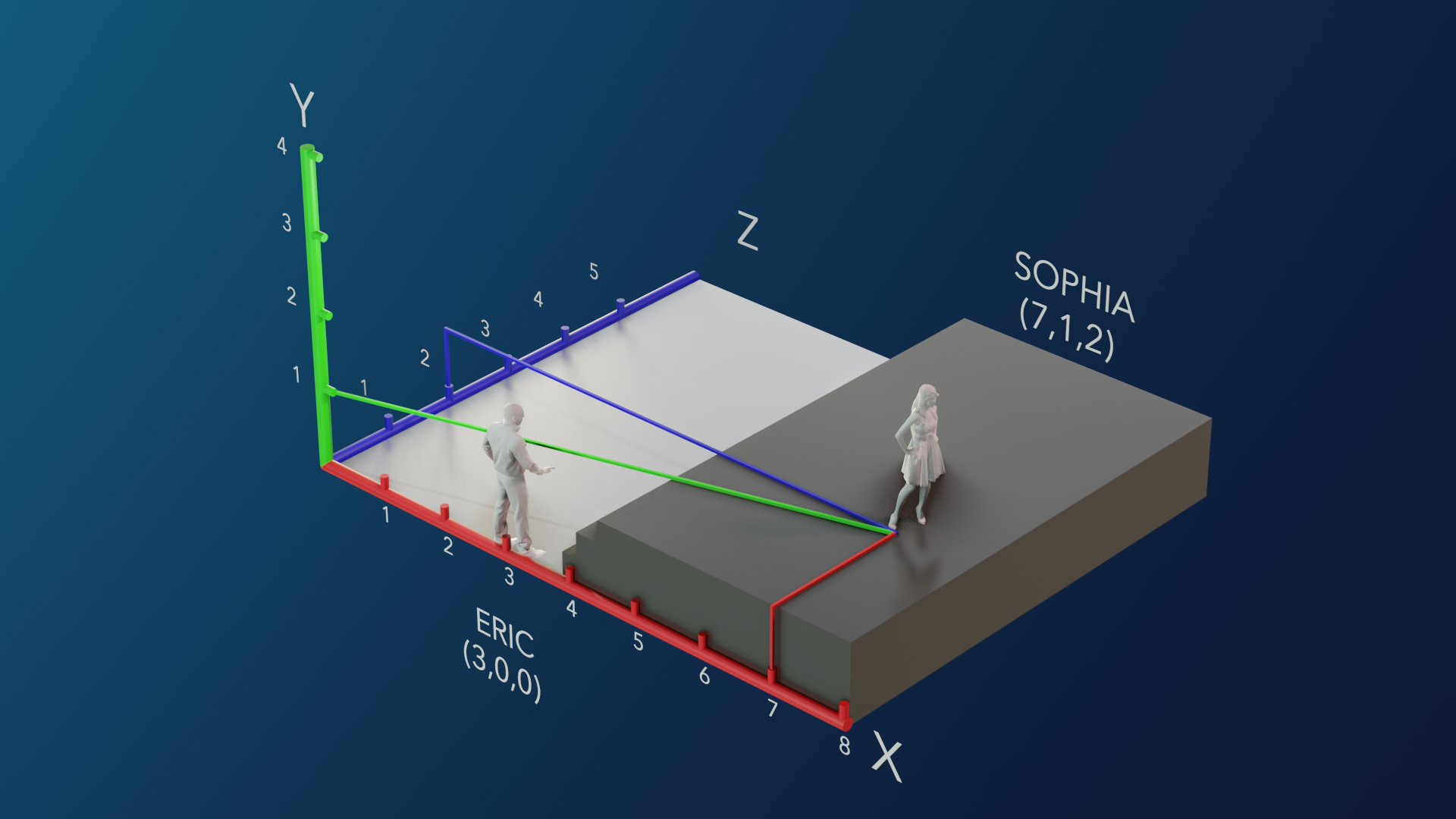

For example, if a local participant Eric, who uses the individual spatial audio style and does not have a set direction, calls setSpatialPosition(VoxeetSDK.session.participant, {x:3,y:0,z:0}), Eric hears audio from the position (3,0,0). If Eric also calls setSpatialPosition(Sophia, {x:7,y:1,z:2}), he hears Sophia from the position (7,1,2). In this case, Eric hears Sophia 4 meters to the right, 1 meter above, and 2 meters in front. The following graphic presents the participants' locations:

Parameters:

| Name | Type | Description |
|---|---|---|
| participant | VTParticipant | The selected participant. Using the local participant sets the location from which the participant will hear a conference. Using a remote participant sets the position from which the participant's audio will be rendered. |
| position | VTSpatialPosition | The participant's audio location. |
| completion | ((_ error: NSError?) -> Void)? | The block to execute when the query completes. |
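The Eric and Sophia example is a component-wise subtraction in the default environment (+X right, +Y up, +Z forward) when the listener has no direction set. `Vec3` and `offset` are hypothetical helpers for illustration, not SDK types.

```swift
// Hypothetical vector type for illustration only.
struct Vec3: Equatable { var x, y, z: Double }

/// Offset of `source` as heard by a listener at `listener`, assuming the
/// default environment and no listener direction.
func offset(of source: Vec3, from listener: Vec3) -> Vec3 {
    Vec3(x: source.x - listener.x, y: source.y - listener.y, z: source.z - listener.z)
}

let eric = Vec3(x: 3, y: 0, z: 0)
let sophia = Vec3(x: 7, y: 1, z: 2)
let heard = offset(of: sophia, from: eric)
print("right: \(heard.x) m, up: \(heard.y) m, forward: \(heard.z) m")
// prints "right: 4.0 m, up: 1.0 m, forward: 2.0 m"
```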

startScreenShare

▸ startScreenShare(broadcast: Bool, completion: ((_ error: NSError?) -> Void)?)

Starts sharing the local participant's screen. The ScreenShare with iOS document describes how to set up screen-share outside the application. The method is available only to participants who joined a conference using the join method; it is not available for listeners.

SDK 3.10 and earlier support sharing only one screen per conference. SDK 3.11 and later allow sharing two screens in one conference, so two participants can share their screens at the same time.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| broadcast | Bool | false | A Boolean that specifies whether the application shares the screen only inside the application (false) or shares the whole screen, even when the application runs in the background (true). |
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |

simulcast

▸ simulcast(requested: [VTParticipantQuality], completion: ((_ error: NSError?) -> Void)?)

Requests a specific quality of the received Simulcast video streams. You can use this method for selected conference participants or for all participants who are present in a conference. For more information, see the Simulcast guide.

This method is not supported for mixed listeners.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| requested | [VTParticipantQuality] | - | The requested quality of the Simulcast video streams. |
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |

stopScreenShare

▸ stopScreenShare(completion: ((_ error: NSError?) -> Void)?)

Stops a screen-sharing session. The method is available only to participants who joined a conference using the join method; it is not available for listeners.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |

updatePermissions

▸ updatePermissions(participantPermissions: VTParticipantPermissions, completion: ((_ error: NSError) -> Void)?)

Updates the participant's conference permissions. If a participant does not have permission to perform a specific action, this action is not available for this participant during a conference. If a participant started a specific action and then lost permission to perform this action, the SDK stops the blocked action. For example, if a participant started sharing a screen and received updated permissions that do not allow them to share a screen, the SDK stops the screen-sharing session and the participant cannot start sharing the screen again.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| participantPermissions | VTParticipantPermissions | - | The updated participant's permissions. |
| completion | ((_ error: NSError) -> Void)? | nil | The block to execute when the query completes. |
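The behavior described above, where losing a permission stops the matching action and prevents restarting it, can be modeled with set operations. This is a hypothetical sketch: `ConferenceAction` and `PermissionState` are illustrative names that do not correspond to VTParticipantPermissions or its values.

```swift
// Hypothetical model of permission updates; these are not SDK types.
enum ConferenceAction: Hashable { case sendAudio, sendVideo, shareScreen }

struct PermissionState {
    private(set) var permissions: Set<ConferenceAction>
    private(set) var active: Set<ConferenceAction> = []

    init(permissions: Set<ConferenceAction>) {
        self.permissions = permissions
    }

    /// Starts an action only if the participant has permission for it.
    @discardableResult
    mutating func start(_ action: ConferenceAction) -> Bool {
        guard permissions.contains(action) else { return false }
        active.insert(action)
        return true
    }

    /// Applies new permissions and stops every active action they no longer allow.
    /// Returns the actions that were stopped.
    @discardableResult
    mutating func apply(newPermissions: Set<ConferenceAction>) -> Set<ConferenceAction> {
        permissions = newPermissions
        let stopped = active.subtracting(newPermissions)
        active.subtract(stopped)
        return stopped
    }
}
```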

videoForwarding

▸ videoForwarding(options: VideoForwardingOptions, completion: ((_ error: NSError?) -> Void)?)

Sets the video forwarding functionality for the local participant. The method allows:

- Setting the maximum number of video streams that may be transmitted to the local participant

- Prioritizing specific participants' video streams that need to be transmitted to the local participant

- Changing the video forwarding strategy that defines how the SDK should select conference participants whose videos will be received by the local participant

This method is available only in SDK 3.6 and later and is not supported for mixed listeners.

Parameters:

| Name | Type | Default | Description |
|---|---|---|---|
| options | VideoForwardingOptions | - | The video forwarding options. |
| completion | ((_ error: NSError?) -> Void)? | nil | The block to execute when the query completes. |
