Spatial Chat

What is spatial chat?

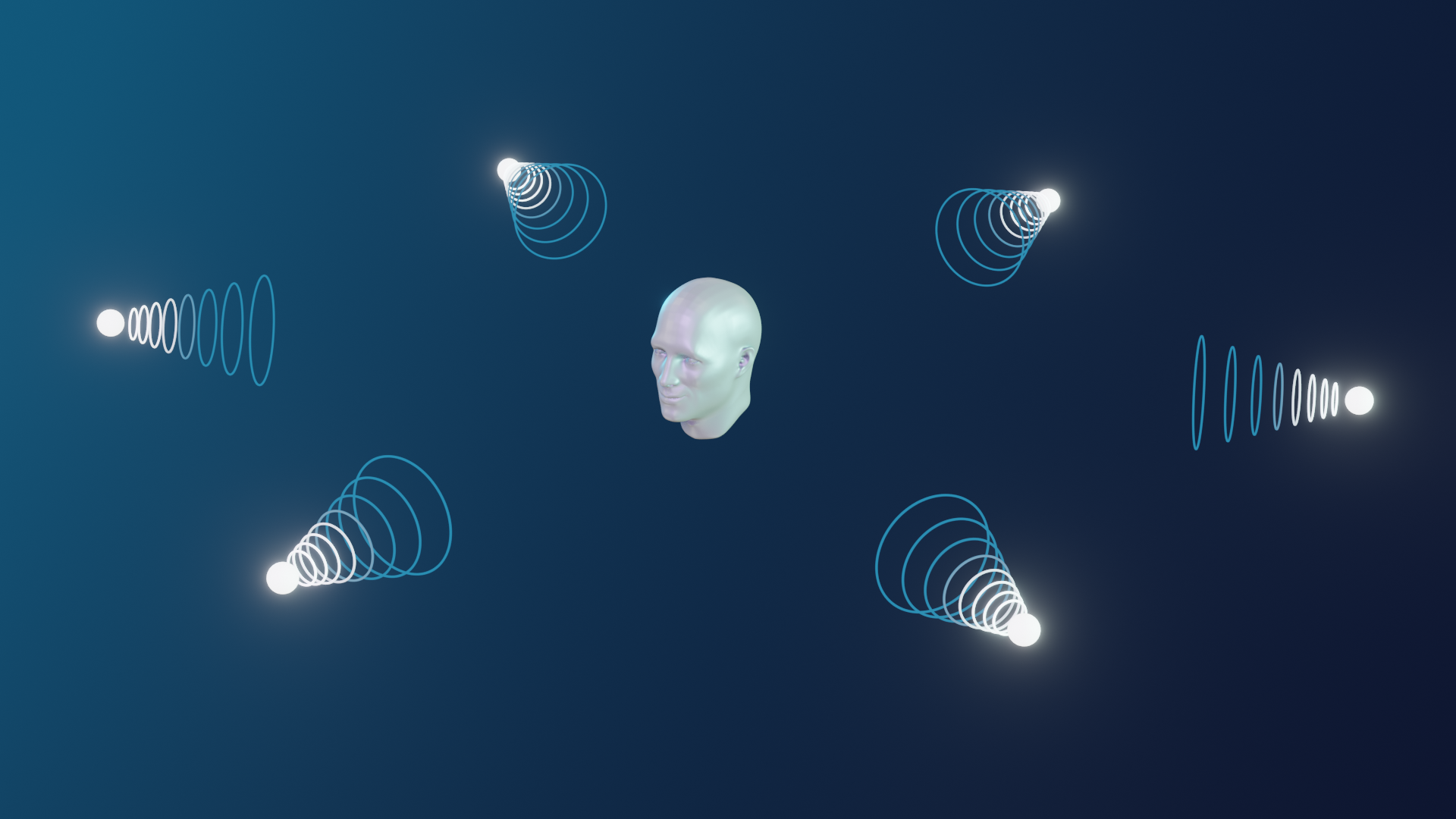

Dolby.io Spatial Chat allows you to place conference participants spatially in a 3D rendered audio scene and hear the audio from the participants rendered at the given locations. This feature replicates audio conditions similar to real conference room conditions to make virtual meetings more natural and realistic. The application configures the audio scene and then gives the positions for the participants in the conference. The application can also change the position the scene is heard from in 3D space.

Spatial chat is supported on Dolby Voice Clients, Stereo Opus Clients, and Mono Opus Clients in Dolby Voice conferences. With Mono Opus Clients, participants receive mono sound but still hear the effect of positions, such as volume differences due to distance. Mono rendering is useful when hardware, a browser, or an operating system enforces mono output. It also helps conference participants with unilateral deafness or other asymmetric hearing impairments, who might otherwise miss important sounds that come from only one direction.

Styles of spatial chat

There are two styles of spatial chat, available on Web, iOS, Android, C++, and .NET, that suit different types of applications:

- In the individual style, positions are not shared between participants, so each participant sets the positions of all participants and hears a unique spatial audio scene. This style is ideal for video conferencing where each participant sees a different layout of video tiles.

- In the shared style, one spatial audio scene is shared by all participants, and each participant hears the scene from their own location in it. This style is ideal for shared spaces, such as virtual trade shows or games.

While spatial chat can be used in many types of applications, this article discusses two use cases: standard video conferencing and a virtual space.

Individual spatial chat: video conferencing

In the individual spatial audio scene, each participant creates a unique scene by setting the positions for all participants. This way, each participant can hear a different spatial audio scene.

A video conferencing application would use spatial chat to map each participant's audio to the same location as their video on the screen. For example, if a participant’s video is shown on the left side of the screen, the audio from that participant is heard from the left. This is known as “audio/video (A/V) congruence”.

The A/V congruent audio scene is set up by defining the rectangle that represents the scene in the application window. Normally this would be the rectangle that the video conference is being shown in. The participants’ positions are then expressed by the positions of their video tiles.
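The mapping from a video tile to an audio position can be sketched as below. This is a minimal illustration, not the SDK's API: the `Rect` and `Position` types and the `tileToPosition` function are hypothetical names, and the sketch assumes that the audio scene uses the same units and origin as the scene rectangle, with all tiles lying on the plane `z = 0`.

```typescript
// Hypothetical types for illustration; they are not part of any SDK.
interface Rect { x: number; y: number; width: number; height: number; }
interface Position { x: number; y: number; z: number; }

// Map the center of a video tile to a position in the audio scene.
// Assumption: coordinates are window pixels, and the audio scene shares
// the origin and units of the scene rectangle.
function tileToPosition(scene: Rect, tile: Rect): Position {
  return {
    x: tile.x + tile.width / 2 - scene.x,
    y: tile.y + tile.height / 2 - scene.y,
    z: 0, // assumption: tiles lie flat on the screen plane
  };
}

// Usage: a tile on the left half of a 1280x720 scene.
const exampleScene: Rect = { x: 0, y: 0, width: 1280, height: 720 };
const exampleTile: Rect = { x: 0, y: 180, width: 640, height: 360 };
console.log(tileToPosition(exampleScene, exampleTile)); // { x: 320, y: 360, z: 0 }
```

The resulting position would then be passed to whichever position-setting call the SDK provides for the participant shown in that tile. Because the tile center lies left of the scene center (x = 320 versus 640), that participant is heard from the left.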

Tiles can be arranged in any way desired by the application developer, though some common examples might include:

- A grid view of equally sized tiles

- A grid view of different sized tiles

- A large main presenter tile with tiles for the rest of the participants in a line underneath

- A panel, where the tiles are arranged as if the participants were sitting at a panel table facing the audience
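As an illustration of the first layout, the sketch below computes a grid of equally sized tiles inside the scene rectangle. The `Rect` type and the `gridTiles` function are hypothetical names chosen for this example, and the near-square column count is only one possible layout rule.

```typescript
// Hypothetical type for illustration; it is not part of any SDK.
interface Rect { x: number; y: number; width: number; height: number; }

// Split the scene rectangle into a grid of equally sized tiles,
// filled row by row from the top-left corner.
function gridTiles(scene: Rect, count: number): Rect[] {
  if (count <= 0) return [];
  const cols = Math.ceil(Math.sqrt(count)); // assumption: near-square grid
  const rows = Math.ceil(count / cols);
  const width = scene.width / cols;
  const height = scene.height / rows;
  const tiles: Rect[] = [];
  for (let i = 0; i < count; i++) {
    tiles.push({
      x: scene.x + (i % cols) * width,
      y: scene.y + Math.floor(i / cols) * height,
      width,
      height,
    });
  }
  return tiles;
}

// Usage: four participants in a 1200x800 scene give a 2x2 grid of 600x400 tiles.
console.log(gridTiles({ x: 0, y: 0, width: 1200, height: 800 }, 4).length); // 4
```

Each tile returned here is the rectangle whose position would express the corresponding participant's place in the audio scene.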

Shared spatial chat: virtual spaces

In a shared spatial audio scene, all participants contribute to the shared scene by setting only their own position. All participants are heard from the positions they set.

In a virtual space, the conference is often audio-only. Various presentation styles are available, such as a top-down 2D map or a style that resembles a 3D game. Each participant in the conference is shown in the space as an avatar. The audio for the other participants appears to come from the position shown for their avatar. Participants can move themselves around the space and see others move as well. The audio scene updates as the participants move. Virtual spaces can be applied to applications such as 2D or 3D games, trade shows, virtual museums, water cooler scenarios, etc.
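The shared model can be sketched as a single table of positions in which each participant writes only their own entry, and every listener derives what they hear from the offsets of the others. This is a conceptual illustration only: the `SharedScene` class and its methods are hypothetical and do not represent the SDK's API or the actual rendering performed by the platform.

```typescript
// Hypothetical types for illustration; they are not part of any SDK.
interface Position { x: number; y: number; z: number; }
interface Heard { offset: Position; distance: number; }

class SharedScene {
  private positions: Record<string, Position> = {};

  // Each participant reports only their own position.
  setPosition(participantId: string, position: Position): void {
    this.positions[participantId] = { x: position.x, y: position.y, z: position.z };
  }

  // For one listener, compute where every other participant is heard from,
  // as an offset from the listener and a straight-line distance.
  heardBy(listenerId: string): Record<string, Heard> {
    const result: Record<string, Heard> = {};
    const listener = this.positions[listenerId];
    if (!listener) return result;
    Object.keys(this.positions).forEach((id) => {
      if (id === listenerId) return;
      const p = this.positions[id];
      const offset = { x: p.x - listener.x, y: p.y - listener.y, z: p.z - listener.z };
      const distance = Math.sqrt(offset.x ** 2 + offset.y ** 2 + offset.z ** 2);
      result[id] = { offset, distance };
    });
    return result;
  }
}

// Usage: Bob's avatar moves, and what Alice hears changes with it.
const demoScene = new SharedScene();
demoScene.setPosition("alice", { x: 0, y: 0, z: 0 });
demoScene.setPosition("bob", { x: 3, y: 4, z: 0 });
console.log(demoScene.heardBy("alice")["bob"].distance); // 5
demoScene.setPosition("bob", { x: 6, y: 8, z: 0 });
console.log(demoScene.heardBy("alice")["bob"].distance); // 10
```

Because there is only one table, a single position update changes the scene for every listener at once, which is what distinguishes this style from the individual one.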

Note: The Dolby.io Communications APIs currently do not support recording conferences that use a shared spatial audio scene.
