Introduction to Streaming APIs

Overview of the Dolby.io Real-time Streaming APIs

Dolby.io Streaming APIs were created to make it easier to stream your high-value content at scale with ultra-low latency.

Deliver 4K video and audio streams to massive audiences while keeping latency under half a second anywhere in the world. With the scale, speed, and quality of the service, the Dolby.io Real-time Streaming APIs support a range of use cases including live events, sports betting, virtual auctions, remote production, and more.

Real-time Streaming

What is Real-time Streaming?

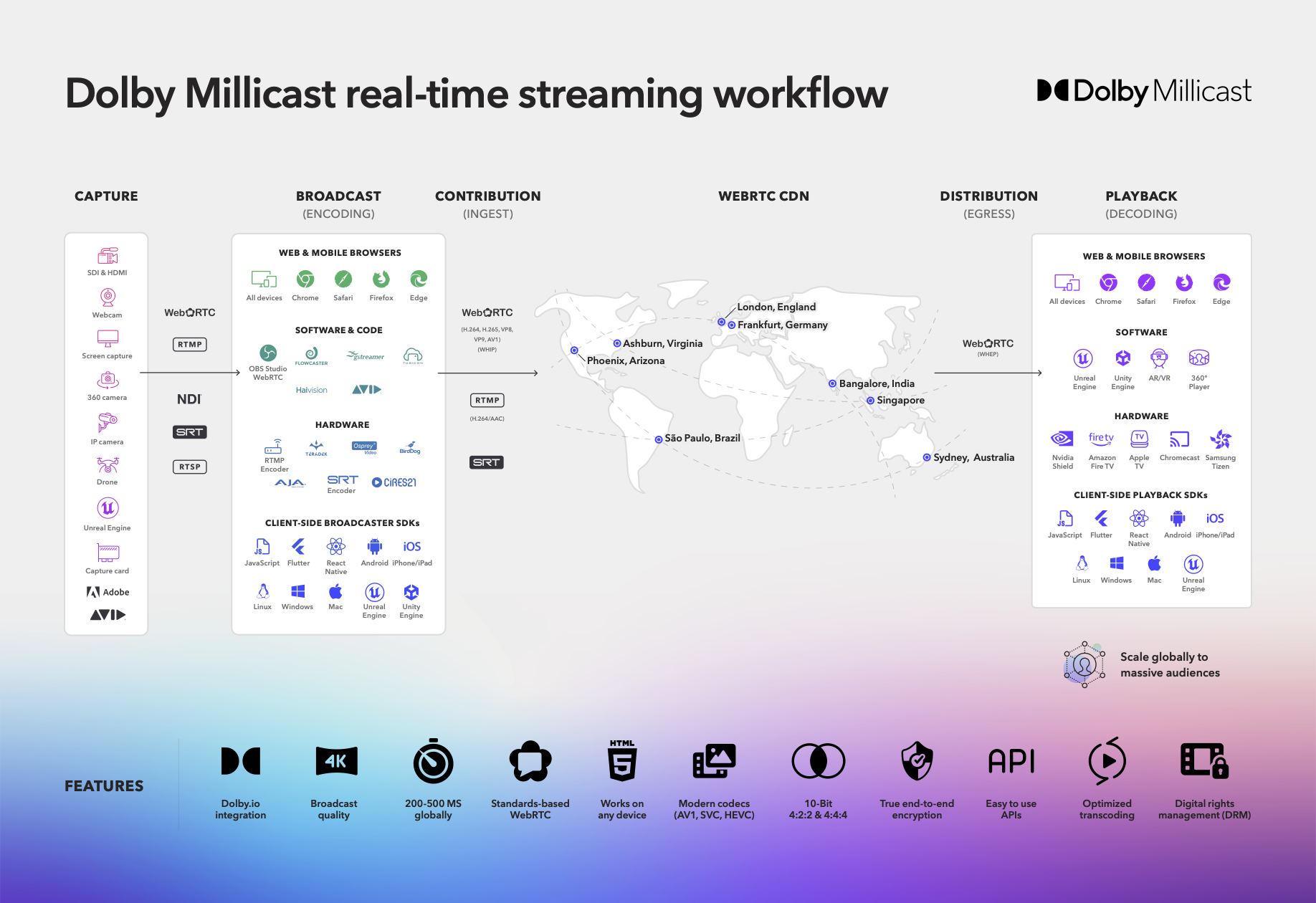

Low-latency streaming has been available for a number of years and delivers media content over HTTP with a delay of about 10 seconds. Real-time Streaming delivers content glass to glass: the content is captured, encoded for broadcast, and distributed over a content delivery network so that end users can play it back globally, at scale, in under 1 second on average.

This enables a wider range of use cases where timing precision matters for high-value streaming content.

How it works

To support many use cases, real-time streaming is built from components that cover four stages: Capture, Broadcast, Distribution, and Playback.

Capture streaming content

To capture content, you need a physical camera, a virtual camera inside a game engine, or a source of content from another content creation tool such as OBS or Adobe Premiere.

Many devices can capture media over a compatible interface or protocol:

✓ SDI & HDMI, Serial Digital Interface (SDI) and High-Definition Multimedia Interface (HDMI) connections are common across many professional cameras and capture devices.

✓ NDI, Network Device Interface, is a commonly used protocol for Video over IP that is supported by video mixers, capture cards, and other devices.

✓ RTSP, Real-time Streaming Protocol, is commonly supported in media streaming servers that capture and process video and audio feeds.

Check with your specific hardware provider for direct support of WebRTC or one of these common device interfaces.

Broadcast encoded media

Broadcasting content requires access to the public internet and an encoder. Encoding can be done in the browser, in software, in hardware, or with the Dolby.io client-side broadcaster SDKs.

The Dolby.io CDN can ingest streams encoded in a few main formats:

✓ WebRTC, an internet transfer protocol that supports the H.264, H.265, VP8, VP9, and AV1 video codecs and the Opus audio codec. Broad support is made possible through implementations of the WebRTC-HTTP Ingestion Protocol (WHIP).

✓ SRT, a video transfer protocol that can be transmuxed to WebRTC via the Dolby.io CDN and supports H.264 video and AAC audio codecs.

✓ RTMP and RTMPS, internet transfer protocols that can be transmuxed to WebRTC via the Dolby.io CDN and support only the H.264 video codec.

For SRT and RTMP, the CDN automatically converts AAC audio to Opus.
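The ingest formats above can be summarized as a small lookup table. The following is a minimal sketch in Python, not part of any Dolby.io SDK: the names `IngestFormat`, `INGEST_FORMATS`, and `check_ingest` are hypothetical, and the AAC entry for RTMP and RTMPS is inferred from the note about AAC conversion rather than stated directly in the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IngestFormat:
    video_codecs: frozenset
    audio_codecs: frozenset
    transmuxed_to_webrtc: bool  # True when the CDN repackages the stream as WebRTC


# Transcribed from the list above. AAC for RTMP/RTMPS is an inference from
# the AAC-to-Opus note, not an explicit statement in the list.
INGEST_FORMATS = {
    "WebRTC": IngestFormat(
        frozenset({"H.264", "H.265", "VP8", "VP9", "AV1"}),
        frozenset({"Opus"}),
        False,
    ),
    "SRT": IngestFormat(frozenset({"H.264"}), frozenset({"AAC"}), True),
    "RTMP": IngestFormat(frozenset({"H.264"}), frozenset({"AAC"}), True),
    "RTMPS": IngestFormat(frozenset({"H.264"}), frozenset({"AAC"}), True),
}


def check_ingest(protocol, video_codec, audio_codec):
    """Return (accepted, note) for a protocol and codec combination."""
    fmt = INGEST_FORMATS.get(protocol)
    if fmt is None:
        return (False, f"{protocol} is not listed as an ingest format")
    if video_codec not in fmt.video_codecs:
        return (False, f"{protocol} ingest does not list {video_codec} video")
    if audio_codec not in fmt.audio_codecs:
        return (False, f"{protocol} ingest does not list {audio_codec} audio")
    if audio_codec == "AAC":
        return (True, "AAC audio is converted to Opus by the CDN")
    return (True, "stream is accepted without audio conversion")


if __name__ == "__main__":
    print(check_ingest("SRT", "H.264", "AAC"))
    print(check_ingest("RTMP", "VP9", "AAC"))
    print(check_ingest("WebRTC", "AV1", "Opus"))
```

A check like this can be useful before configuring an encoder, since it makes clear that codecs other than H.264 are listed for WebRTC ingest only.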

Distribution with WebRTC CDN

The Dolby.io Streaming CDN offers a range of server-side features that users can toggle and adjust via the REST APIs or the Dashboard to ensure distribution of streams is secure, stable, and scalable.

✓ Scalability to distribute content to large audiences across multiple regions in real-time.

✓ Stability with features like simulcast that provide redundancy and adaptability, keeping uptime and Quality of Experience (QoE) high across different network and device conditions.

✓ Security through features that protect your content: subscriber tokens, self-signed tokens, allowed-origin restrictions, geo-blocking, and IP filtering.

Distribution of streaming content requires scalability, stability, and security along with a collection of robust features including stream recordings, multi-source streams, multi-bitrate delivery, backup publishing, stream syndication, and streaming analytics.
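To illustrate how simulcast and multi-bitrate delivery adapt to network conditions, here is a minimal conceptual sketch in Python. It is not the Dolby.io CDN's selection logic: the layer names, resolutions, bitrates, and the `headroom` factor are all hypothetical values chosen for the example.

```python
from typing import NamedTuple


class Layer(NamedTuple):
    name: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # approximate bitrate the layer needs


# Hypothetical simulcast layers published by a single broadcaster.
LAYERS = [
    Layer("high", 1080, 4500),
    Layer("medium", 720, 2000),
    Layer("low", 360, 600),
]


def pick_layer(layers, available_kbps, headroom=0.8):
    """Pick the highest-bitrate layer that fits the viewer's bandwidth.

    Only a fraction (headroom) of the estimated bandwidth is budgeted so
    that small fluctuations do not immediately cause stalls. If no layer
    fits, fall back to the lowest-bitrate layer.
    """
    budget = available_kbps * headroom
    affordable = [layer for layer in layers if layer.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda layer: layer.bitrate_kbps)
    return min(layers, key=lambda layer: layer.bitrate_kbps)


if __name__ == "__main__":
    for estimate in (10000, 3000, 100):
        print(estimate, "kbps ->", pick_layer(LAYERS, estimate).name)
```

The point of the sketch is that each viewer can receive a different layer of the same stream, so one viewer on a weak connection does not degrade the experience for the rest of the audience.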

Playback streaming media

The final component of the streaming workflow is taking the stream, after it has passed through the Dolby.io CDN, and playing it back to the end viewer. As on the broadcasting side, decoding and playback are supported in web and mobile browsers, software, hardware, and the client-side SDKs.

✓ Hosted streaming player with low-code drop-in support for many applications.

✓ Interactive playback for streams that support dynamic tracks, multiple layers, multiple views, and playback events.

✓ Support with Client SDKs for building custom playback viewers for Web, iOS, Android, React-Native, Flutter, Unity, Unreal, .NET, and desktop applications.

✓ Broad support is made possible through implementations of the WebRTC-HTTP Egress Protocol (WHEP).

✓ Preview streams with poster images and thumbnails.

✓ REST and GraphQL APIs that provide analytics for individual streams, including bandwidth usage for the users viewing a stream.
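WHEP, mentioned in the list above, starts a playback session with a single HTTP POST that carries an SDP offer. The sketch below builds such a request with Python's standard library without sending it. The endpoint URL, token, and the `build_whep_request` helper are hypothetical, and the offer string is a placeholder: a real offer would be generated by a WebRTC stack.

```python
import urllib.request


def build_whep_request(endpoint_url, sdp_offer, token=None):
    """Build (but do not send) the HTTP POST that opens a WHEP session."""
    headers = {"Content-Type": "application/sdp"}
    if token:
        # Bearer token authentication; the token value here is an assumption.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        endpoint_url,
        data=sdp_offer.encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder offer: a real SDP offer comes from a WebRTC library.
    placeholder_offer = "v=0\r\n"
    request = build_whep_request(
        "https://example.com/whep/my-stream",  # hypothetical endpoint
        placeholder_offer,
        token="example-token",
    )
    print(request.get_method(), request.full_url)
    print(request.header_items())
```

On success, a WHEP server is expected to answer with `201 Created`, an SDP answer in the response body, and a `Location` header that identifies the session resource. Check the documentation for your playback endpoint for the exact URL format and authentication requirements.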
